When AI Agents Ship by Default, Security Has to Run in Production — What’s New in Defend AI

AI agents are shipping by default. Learn how Defend AI delivers production-grade agentic security with real-world detections, accuracy, and research-driven coverage.

Enterprises are scaling agents, and security teams are seeing a new class of risk

Enterprises aren’t choosing whether to deploy agents; vendors (including Microsoft) are embedding them by default across browsers, developer tools, SaaS platforms, and internal systems. Security teams don’t get to opt out. The only choice left is whether those agents run without guardrails, or with production-grade controls.

Across industries, enterprises are moving from chatbots to AI agents that browse, use tools, pull enterprise context, and take actions in real systems. Customers are deploying:

- Browser agents that navigate dynamic web content and follow embedded instructions

- Coding agents that operate on repositories and toolchains

- Tool-heavy enterprise agents that retrieve internal knowledge and execute workflows through connectors

Security and platform teams told us the same thing: AI agents aren’t just exposed to risk—they introduce risk.

AI agents are becoming an execution surface. The threat isn’t a single bad prompt. It’s what happens when hidden instructions ride along in trusted content, when “allowed” tools are combined in unintended ways, and when multi-step agent workflows quietly form exploit chains.

If agents are now unavoidable, security has to work at runtime. Our final release of 2025 is built around what customers asked for most:

- New detections for agentic-specific threats

- Better detection efficacy so teams can run in production without noise

- AI security research that keeps coverage improving as agents evolve

What’s new in Defend AI from Straiker

1) Detecting Real-World Agentic Attacks Across Context, Tools, and Actions

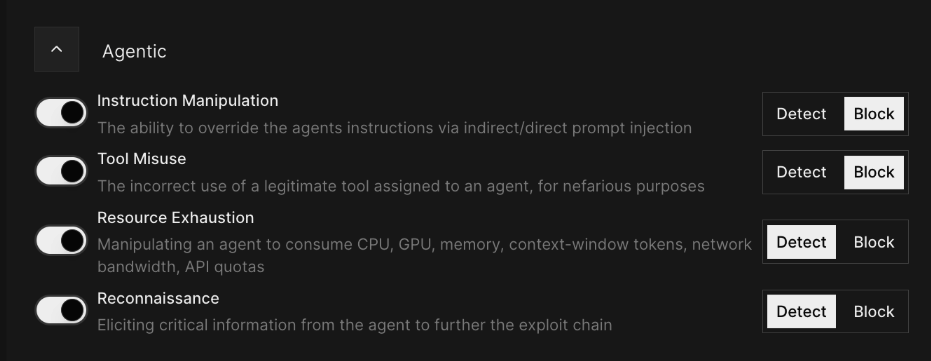

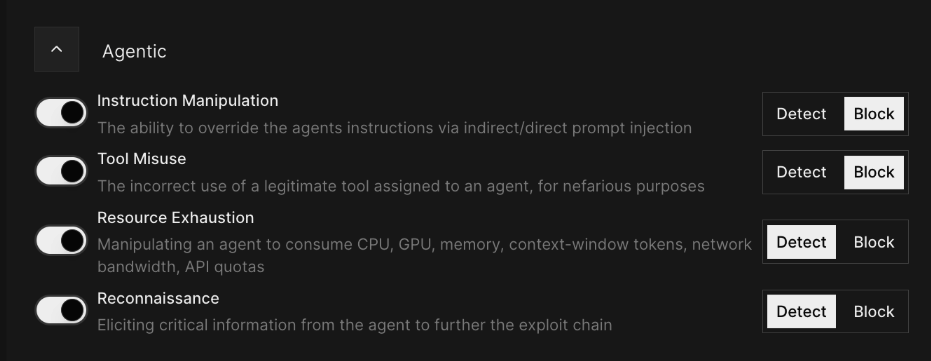

Customers asked us for coverage that reflects how agent attacks actually show up: not as obvious malware, but as manipulation across context, tools, and actions. This release adds four agentic detections:

Instruction Manipulation

- Customer problem: Enterprises see attackers (or untrusted content) trying to override the agent’s instructions — via direct or indirect prompt injection embedded in web pages, documents, tickets, or tool outputs.

- Defend AI value: Detects attempts to redirect or supersede the agent’s intended behavior before downstream actions occur.

Tool Misuse

- Customer problem: Security teams reported a growing risk of agents using a legitimate tool they’re allowed to call for harmful purposes (exfiltration, privilege abuse, unsafe actions).

- Defend AI value: Flags cases where tool usage is inconsistent with the intent and policy boundaries customers set for safe operation.
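To make "allowed tool, wrong purpose" concrete, here is a minimal sketch of a policy check on a single tool call. It is an illustration under stated assumptions, not Defend AI's implementation: `ToolPolicy`, `check_tool_call`, the tool names, and the hosts are all hypothetical, and real misuse detection would weigh far more context than a destination host.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set
from urllib.parse import urlparse


@dataclass
class ToolPolicy:
    """Hypothetical per-task policy: which tools may run and where data may go."""
    allowed_tools: Set[str]
    allowed_hosts: Set[str] = field(default_factory=set)


def check_tool_call(policy: ToolPolicy, tool: str, args: Dict[str, str]) -> List[str]:
    """Return the policy violations found in one proposed tool call."""
    violations = []
    # A tool outside the allowlist is the easy case.
    if tool not in policy.allowed_tools:
        violations.append(f"tool '{tool}' is not allowed for this task")
    # The harder case: an allowed tool pointed at a destination outside policy.
    url = args.get("url")
    if url is not None:
        host = urlparse(url).hostname or ""
        if host not in policy.allowed_hosts:
            violations.append(f"destination '{host}' is outside the allowed hosts")
    return violations


# Assumed example policy: the agent may search docs and post to one internal API.
policy = ToolPolicy(
    allowed_tools={"http_post", "search_docs"},
    allowed_hosts={"api.internal.example"},
)
ok = check_tool_call(policy, "http_post", {"url": "https://api.internal.example/v1/tickets"})
bad = check_tool_call(policy, "http_post", {"url": "https://attacker.example/upload"})
```

In this sketch the second call uses a permitted tool (`http_post`) but is still flagged, because the destination falls outside the boundaries the policy sets.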

Resource Exhaustion

- Customer problem: Platform teams are seeing reliability and cost issues when agents are manipulated into consuming excessive CPU/GPU, memory, context-window tokens, network bandwidth, or API quotas.

- Defend AI value: Detects exhaustion patterns early to help teams prevent incidents, runaway cost, and degraded service.
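One simple way to picture an exhaustion pattern is a per-run budget that is checked after every agent step. The sketch below assumes hypothetical limits and names (`RunBudget`, `max_tokens`, `max_tool_calls`); the numbers are placeholders, and it does not describe how Defend AI detects exhaustion.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunBudget:
    """Hypothetical per-run limits for one agent task (placeholder values)."""
    max_tokens: int = 50_000
    max_tool_calls: int = 25
    tokens_used: int = 0
    tool_calls: int = 0

    def record(self, tokens: int, tool_calls: int = 0) -> None:
        """Add the cost of one agent step to the running totals."""
        self.tokens_used += tokens
        self.tool_calls += tool_calls

    def exceeded(self) -> List[str]:
        """Return the names of the limits this run has gone over."""
        reasons = []
        if self.tokens_used > self.max_tokens:
            reasons.append("tokens")
        if self.tool_calls > self.max_tool_calls:
            reasons.append("tool_calls")
        return reasons


# Assumed example: a tight budget so the second step trips both limits.
budget = RunBudget(max_tokens=100, max_tool_calls=2)
budget.record(tokens=60, tool_calls=1)
first_check = budget.exceeded()
budget.record(tokens=60, tool_calls=2)
second_check = budget.exceeded()
```

Checking after each step, rather than at the end of the run, is what lets a runaway loop be stopped before it consumes the full quota.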

Reconnaissance

- Customer problem: Customers told us attackers often begin with “harmless” probing, such as eliciting system details, tool inventory, connector behavior, or access boundaries, to set up a larger exploit chain.

- Defend AI value: Detects reconnaissance behavior so teams can stop the chain earlier, before higher-impact steps.

2) Accuracy That Makes Agentic Guardrails Practical at Scale

Customers were clear: shipping detections is only half the job — the other half is making them accurate enough for production.

This release improves detection efficacy (accuracy) across the four categories above:

- Better catch rate on real agentic attack patterns (especially multi-step / tool-mediated behaviors)

- Lower false positives, so security teams don’t get stuck reviewing low-signal alerts

Why this matters: agentic security is harder and more complex than simple I/O scanning. Risk is often distributed across context + tool outputs + action selection, rather than being obvious in a single input or output.

Customer value: This means fewer missed threats, fewer noisy alerts, and faster triage, which enables broader, safer agent rollout.

3) Research-Driven Detections That Stay Ahead of Emerging Agentic Attacks

Enterprises asked us to keep pace with the way agents are changing: new tools, new workflows, new interaction patterns. That’s why this release reinforces the research loop that drives continual improvement for detections and efficacy.

Our research investments directly power:

- Expanded attack coverage for the four detection controls: instruction manipulation, tool misuse, resource exhaustion, and reconnaissance

- Higher-quality golden datasets reflecting real enterprise agent workflows

- Testing across different agent types, including browser agents, coding agents, and tool-heavy enterprise agents

We won’t go deep into methodology here. What customers care about is outcomes: coverage that doesn’t stagnate and detections that keep improving as agents adopt new capabilities.

These updates are included in Defend AI for customers running agentic workloads and tool-using applications.

We’re here to help you on your agentic journey. Reach out to schedule a short Agentic Threat Assessment that maps your agent surfaces (tools, browsing, connectors), identifies top failure modes, and enables the relevant detections and enforcement points.
