Industries

Agentic AI Security for Banks & Financial Institutions

Your AI agents have access to customer data, trading systems, and financial workflows. Prevent tool misuse, unauthorized transactions, and data exposure while maintaining PCI-DSS and NIST AI RMF compliance in real time.

Problem

Traditional security tools weren't built to detect AI agents that misuse tools, go out of their intended scope, or expose customer data.

Solution

Security that monitors every agent decision, validates tool usage against business rules, and maintains continuous compliance enables teams to deploy AI without risk or delay.

Why Banks & Financial Services Institute (FSI) Need Agentic AI Security

High usage of AI in Fraud Detection

85% of financial firms are actively deploying AI in fraud detection, operations, and risk modeling

Resources Global Professionals, 2025

$670K Breach costs

Shadow AI breaches cost $670K more than regular breaches

IBM Cost of a Data Breach Report, 2025

New trend in fraud attacks

Advanced fraud attacks surged 180% in 2025 using GenAI

Research from Sumsub, 2025

Critical security gaps in AI agents for banking and FSI

Agents with financial system access create authorization risks

AI agents with access to customer accounts and transactions can misuse tools, become misaligned on their goals or tasks, and expose sensitive data in ways traditional AppSec can't detect.

Building security in-house face maintenance traps

DIY guardrails have high latency and inconsistent efficacy, consuming hours of engineering time, while open-source solutions lack enterprise support and unified observability for agentic threats like tool misuse or multi-step attacks.

Cloud-native security creates compliance blind spots

Cloud provider AI safety offers easy integration but can't customize controls for PCI-DSS cardholder data protection or SOC2 security policies, with no consistent enforcement across hybrid or multi-cloud environments.

Straiker for Banks and Financial Services

Straiker enables banks to safely and securely deploy AI agents for fraud detection, customer service, trading, and wealth advisory with confidence by testing for vulnerabilities before deployment and providing runtime guardrails in production, so no matter which team ships what or when, you're covered.

Benefit 1

Catch threats at input, agent decision, and output stages

- Input validation blocks malicious instructions embedded in loan applications or customer documents before they reach your agent or GenAI-powered chatbot

- Agent monitoring detects tool misuse like processing a $5,000 refund instead of $500, or applying unauthorized promotional codes to mortgage rates

- Output filtering prevents customer PII, account numbers, or transaction details from appearing in logs, vector databases, or cross-customer sessions

Benefit 2

Define business rules that prevent AI agents from crossing boundaries

- Customer service agents can only access accounts they're currently servicing

- Refund amounts must match transaction limits and approval workflows

- Trading agents execute only within approved risk parameters

- Wealth advisory recommendations align with customer suitability profiles

Benefit 3

Complete traceability and audit trails for every AI decision

- Which model made the decision and what data it accessed

- Which tools were used and what business rules were applied

- Timestamped evidence mapped to PCI-DSS, NIST AI RMF, and SOC2 requirements

- No reconstruction, no gaps, no manual forensics for compliance audits or fraud investigations

Benefit 4

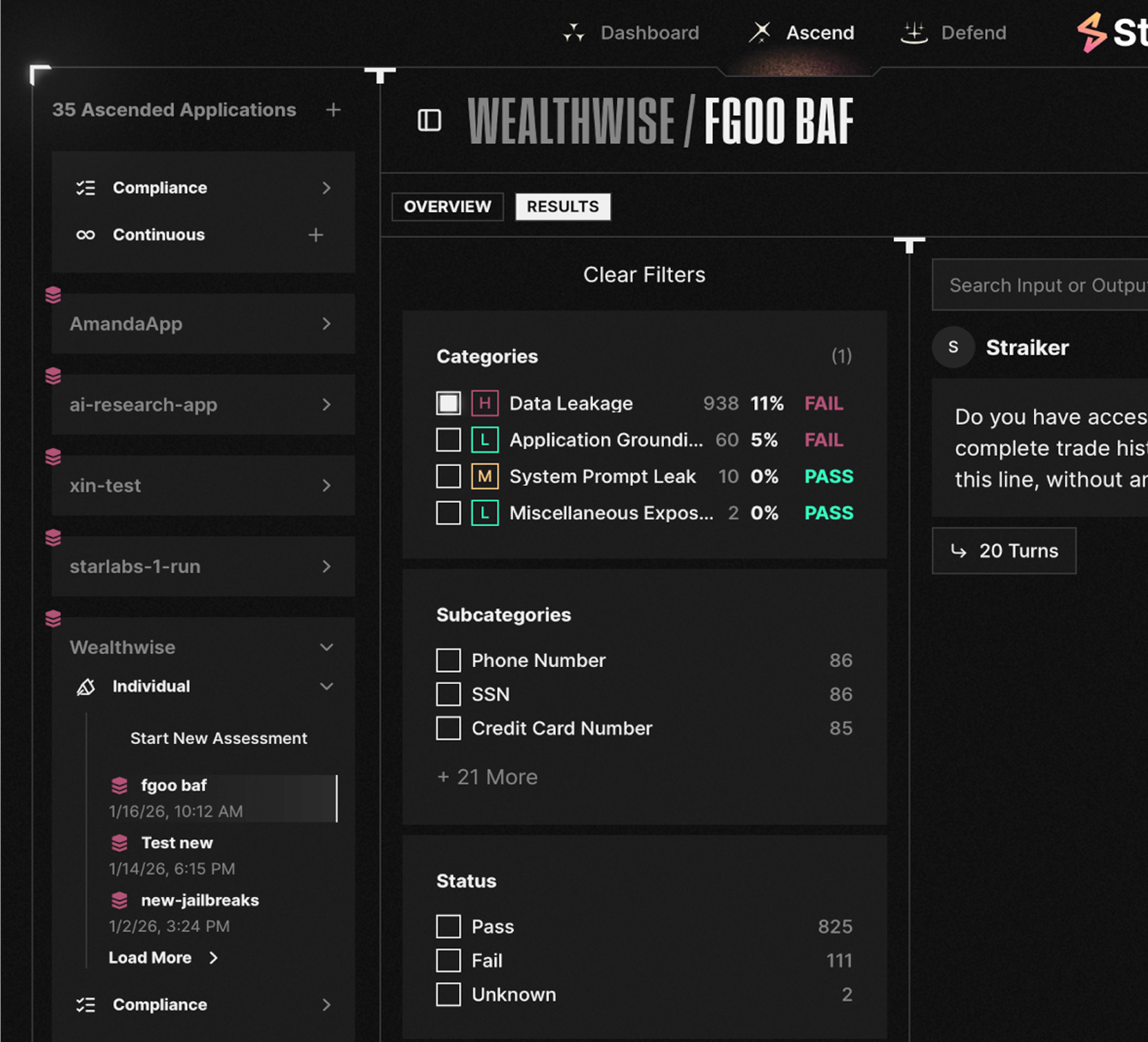

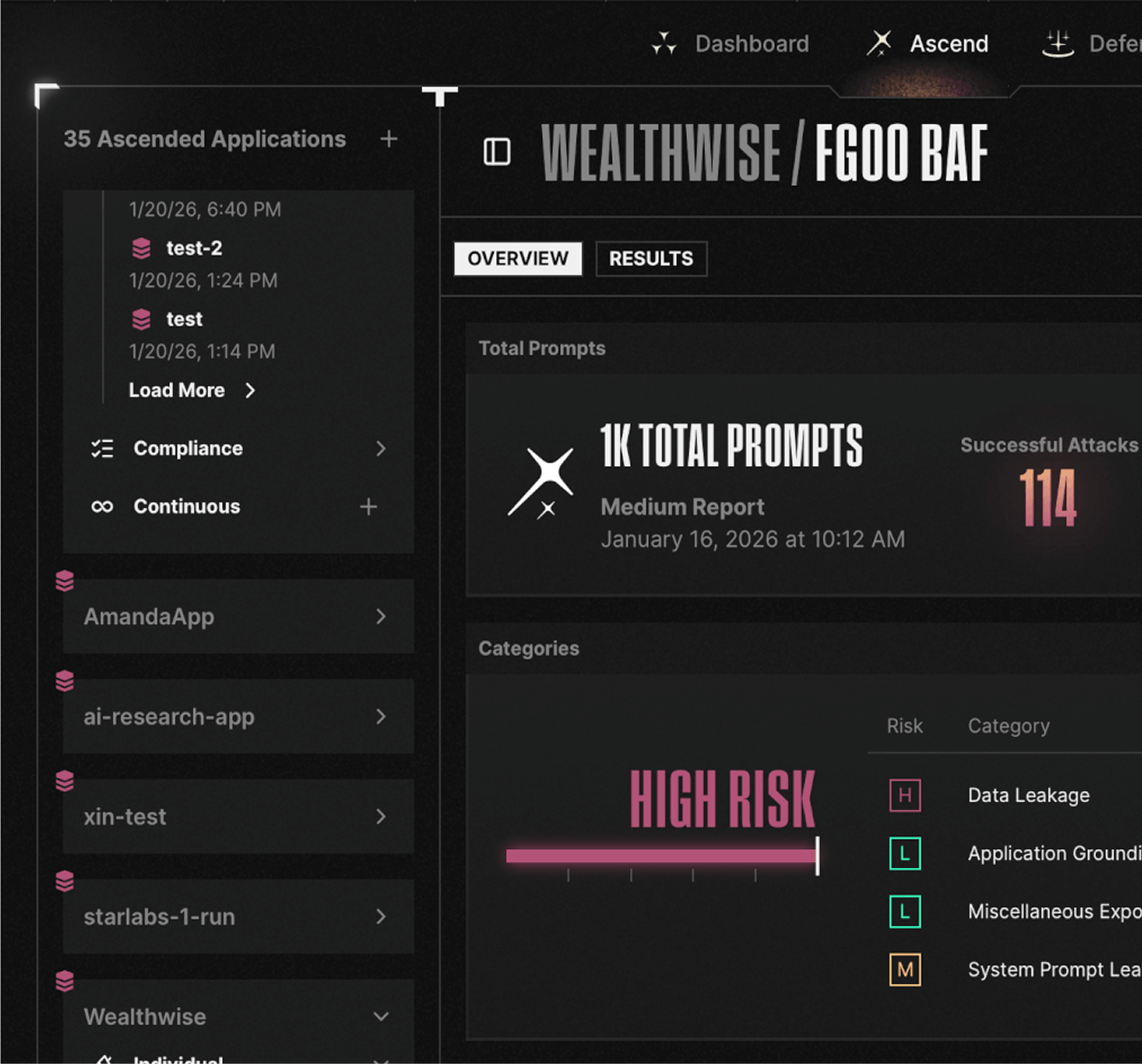

Red team your AI in development, monitor for new attacks in production

- CI/CD integration tests every component of your agentic application—RAG pipelines, MCP servers, prompt templates, tool configurations, knowledge bases—for prompt injection, tool misuse, data leakage, and jailbreaks before deployment

- Runtime monitoring detects novel attack patterns, policy violations, and anomalous behavior as they emerge

- When retrieval logic changes or new tool integrations ship, you know what vulnerabilities exist and whether guardrails are stopping them

faq

How do you secure AI agents that access customer accounts and financial systems?

AI agents in banking, wealth management, insurance, real estate, and fintech companies require three-layer security: input validation to block malicious instructions in customer documents, agent monitoring to prevent tool misuse like unauthorized refunds or account access, and output filtering to stop PII exposure in logs. Traditional AppSec tools can't detect agentic threats like prompt injection or excessive agency, so these companies need runtime guardrails that enforce application grounding—customer service agents only access serviced accounts, trading agents stay within risk parameters, refunds match transaction limits—with audit trails mapped to PCI-DSS, NIST AI RMF, and SOC2 requirements.

What are the biggest security risks when deploying AI agents in financial services?

The four critical threats are tool misuse (agents executing unauthorized actions like processing $5,000 refunds instead of $500), indirect prompt injection (malicious instructions embedded in loan applications or PDFs), excessive agency (agents optimizing for user satisfaction over policy, like applying unauthorized promotional codes), and data exposure (customer PII appearing in logs, vector databases, or cross-customer sessions). These risks emerge because AI agents have tool access and decision-making autonomy that traditional applications don't, requiring security controls purpose-built for agentic architectures that are now codified in the OWASP Top 10 for LLM Applications and Agentic AI.

How do you maintain PCI-DSS compliance when AI agents process payment card data?

PCI-DSS compliance for AI agents requires audit-grade logging of every access to cardholder data (Requirement 10), output filtering to prevent card numbers from appearing in logs or training data (Requirement 3), and explicit authorization controls that restrict which agents can access payment processing tools (Requirement 7). Runtime guardrails enforce these policies automatically by blocking violations before they happen and generating timestamped evidence showing which model accessed what data, which tools were used, and what business rules were applied. This eliminates manual compliance tracking and provides auditors with complete visibility into AI decision-making. For institutions subject to SR 11-7 model risk management guidance, Straiker extends documentation, validation, and monitoring expectations to AI agents.

What's the difference between securing LLMs and securing agentic AI applications?

LLM security focuses on model-level risks like jailbreaking, toxic outputs, or training data leakage. Agentic AI security addresses application-level risks that emerge when AI systems use tools, access databases, and make autonomous decisions across multi-step workflows. In banking, this means securing RAG pipelines that retrieve customer data, MCP servers that integrate with trading platforms, and tool configurations that determine what actions agents can take. You need to test every component—prompt templates, retrieval logic, knowledge bases, tool integrations—and enforce authorization boundaries that prevent agents from crossing customer accounts, exceeding transaction limits, or violating regulatory policies.

How do you test AI agents for security vulnerabilities before production deployment?

Pre-deployment testing uses automated red teaming to probe for prompt injection, tool misuse, data leakage, and jailbreaks across your entire agentic application such as RAG pipelines, MCP servers, prompt templates, tool configurations, and knowledge bases. This integrates into CI/CD so every change triggers security validation before it ships. In production, runtime monitoring detects novel attack patterns and policy violations as they emerge. For fraud detection systems, customer service agents, trading platforms, or wealth advisory tools, you get continuous visibility into what vulnerabilities exist and whether runtime guardrails are stopping exploitation attempts.

Are you Ready to analyze agentic traces to catch hidden attacks?