
Agentic AI Security for Hospitals and Healthcare Systems

Your AI agents handle patient data, clinical workflows, and care decisions. Prevent PHI exposure, hallucinated medical guidance, and missed crisis signals while maintaining HIPAA compliance and patient safety at runtime.

Problem

Traditional security tools weren't built to detect AI agents that can provide unsafe medical guidance, expose protected health information, or fail to escalate patient crises.

Solution

Care teams can deploy AI without risking harm or compliance violations when security validates every clinical response, protects PHI across all layers, and ensures patient safety.

Why Hospitals & Healthcare Systems Need Agentic AI Security

Rise in agentic application usage

68% of healthcare organizations are now using agentic AI applications, up from experimental pilots just two years ago

Menlo Ventures, 2025

Health technology hazard

AI in healthcare is the #1 health technology hazard for 2025, with risks including hallucinations, bias, and patient harm from improper oversight

ECRI, 2025

Cost of a data breach

U.S. data breaches across industries now average $10.22M per incident, a 9.2% increase from 2024

IBM Cost of a Data Breach Report, 2025

Critical security gaps in AI agents for hospitals and healthcare

AI agents with clinical access create patient safety risks

AI agents handling patient data, clinical workflows, and care decisions can hallucinate medical guidance, expose PHI across sessions, or fail to escalate patient crises—risks that traditional AppSec tools weren't designed to detect.

Building safety guardrails in-house consumes clinical IT resources

DIY guardrails struggle to validate medical accuracy, detect crisis language, or prevent PHI leakage across logs and embeddings. Open-source solutions lack healthcare-specific safety controls and unified observability for threats like context poisoning or unsafe treatment recommendations.

Patient crisis signals require escalation, not blocking

When patients express suicidal ideation, describe abuse, or show signs of mental health distress, AI systems need to connect them to crisis hotlines, on-call providers, and emergency resources—not simply block the interaction. Standard content filters aren't designed for empathetic escalation workflows.

Straiker for Hospitals and Healthcare Systems

Healthcare AI needs to be secure and safe—preventing data breaches isn't enough when a single hallucinated dosage or missed crisis signal can directly impact patient outcomes. Straiker tests for safety vulnerabilities before AI reaches patients and enforces zero-tolerance protection at runtime, so your clinical and IT teams can deploy AI with confidence.

Benefit 1

Multi-layer safety validation for every patient interaction

- Grounding validation ensures medical claims are supported by retrieved clinical sources, not hallucinated

- Safety evaluation assesses whether responses are appropriate for this specific patient in this specific context

- Compliance validation checks responses against HIPAA requirements, required disclaimers, and scope limitations

- Catches risks that static keyword filters miss, because patient safety depends on clinical context
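To make the grounding idea concrete, here is a minimal sketch of how a response might be checked against retrieved sources. It is an illustration only, not Straiker's implementation: the function name `ungrounded_sentences`, the `min_overlap` threshold, and the use of simple word overlap are all assumptions. A production system would likely rely on an entailment or fact-checking model rather than lexical overlap.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercase alphanumeric tokens; a crude stand-in for real clinical NLP."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def ungrounded_sentences(response: str, sources: list[str],
                         min_overlap: float = 0.6) -> list[str]:
    """Return sentences whose words are poorly covered by every retrieved source.

    Hypothetical helper: a sentence counts as grounded when at least
    `min_overlap` of its tokens appear in a single source passage.
    """
    source_tokens = [_tokens(s) for s in sources]
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", response.strip()):
        words = _tokens(sentence)
        if not words:
            continue
        # Best coverage of this sentence by any one source (0.0 if no sources).
        best = max((len(words & st) / len(words) for st in source_tokens),
                   default=0.0)
        if best < min_overlap:
            flagged.append(sentence)
    return flagged


sources = ["Adults may take acetaminophen 500 mg every 6 hours as needed."]
response = ("Take acetaminophen 500 mg every 6 hours. "
            "Doubling the dose is safe for faster relief.")
print(ungrounded_sentences(response, sources))
```

In this example only the second sentence is flagged, since none of its words appear in the retrieved source. Word overlap cannot judge whether a supported-looking claim is clinically appropriate for a given patient, which is why the safety and compliance layers above remain separate checks.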

Benefit 2

Protect PHI at every layer of the agentic AI application

- Detect and tokenize PHI before it reaches logs, monitoring systems, or vector databases

- Prevent cross-patient data leakage between sessions

- Block embedding exposure that could surface clinical notes to unauthorized users
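As a rough illustration of tokenizing PHI before it reaches logs, the sketch below replaces matches with keyed, deterministic tokens and applies that step through a standard `logging.Filter`. Everything here is hypothetical: the three regex patterns, the `tokenize_phi` helper, and the `PHIRedactingFilter` class are assumptions made for the example, and real PHI detection needs far broader coverage (names, dates, addresses, free-text clinical notes) than a few patterns can provide.

```python
import hashlib
import hmac
import logging
import re

# Illustrative patterns only; not a complete PHI detector.
PHI_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def tokenize_phi(text: str, secret: bytes) -> str:
    """Replace each PHI match with a keyed token such as [SSN:ab12cd34ef56].

    The same value always maps to the same token, so log lines can still be
    correlated without exposing the underlying identifier.
    """
    def make_token(kind: str, value: str) -> str:
        digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256)
        return f"[{kind}:{digest.hexdigest()[:12]}]"

    for kind, pattern in PHI_PATTERNS.items():
        text = pattern.sub(lambda m, k=kind: make_token(k, m.group(0)), text)
    return text


class PHIRedactingFilter(logging.Filter):
    """Logging filter that tokenizes PHI in the fully formatted message."""

    def __init__(self, secret: bytes) -> None:
        super().__init__()
        self.secret = secret

    def filter(self, record: logging.LogRecord) -> bool:
        # Format first so PHI passed via args is covered, then drop the args.
        record.msg = tokenize_phi(record.getMessage(), self.secret)
        record.args = ()
        return True


logger = logging.getLogger("clinical_agent")
logger.addFilter(PHIRedactingFilter(secret=b"replace-with-a-managed-key"))
logger.warning("Lookup for MRN: %s failed, callback %s", "00123456", "555-867-5309")
```

The same `tokenize_phi` step could in principle run before text is embedded or written to a vector database, so that identifiers never enter the index in the first place. Key management for the secret is out of scope for this sketch.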

Benefit 3

Crisis signal detection that keeps AI agents in scope

- Detect signals of suicidal ideation, abuse, or mental health distress in real time

- Alert your team to take appropriate action based on your clinical protocols

- Ensure AI agents stay within safe boundaries when patients need human intervention
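The difference between escalating and blocking can be sketched as a routing decision. The example below is a simplified illustration, not a description of any product's behavior: `route_message`, `Decision`, the threshold, the reply wording, and the toy phrase-based scorer are all assumptions. In practice the scorer would be a clinically validated classifier, and the escalation message and notification path would follow your own clinical protocols.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Action(Enum):
    CONTINUE = "continue"
    ESCALATE = "escalate"


@dataclass
class Decision:
    action: Action
    reply: str
    notify_on_call: bool


# Placeholder wording; real text should come from approved clinical protocols.
ESCALATION_REPLY = (
    "It sounds like you are going through something serious. "
    "I am connecting you with a member of our care team now. "
    "If you are in immediate danger, please call your local emergency number."
)


def route_message(message: str,
                  crisis_score: Callable[[str], float],
                  agent_reply: Callable[[str], str],
                  threshold: float = 0.5) -> Decision:
    """Escalate to humans on a crisis signal instead of blocking the patient."""
    if crisis_score(message) >= threshold:
        # The agent steps out of scope: supportive reply plus a human hand-off.
        return Decision(Action.ESCALATE, ESCALATION_REPLY, notify_on_call=True)
    return Decision(Action.CONTINUE, agent_reply(message), notify_on_call=False)


def toy_crisis_score(message: str) -> float:
    """Stand-in scorer for the demo; phrase matching is NOT adequate in practice."""
    phrases = ("want to die", "hurt myself", "kill myself")
    return 1.0 if any(p in message.lower() for p in phrases) else 0.0


decision = route_message("I feel like I want to die",
                         toy_crisis_score,
                         agent_reply=lambda m: "Here is your appointment info.")
print(decision.action, decision.notify_on_call)
```

The design point is that the crisis branch never returns an empty or refused response: the patient always receives a supportive message, and the on-call flag gives the care team a signal to act on.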

Benefit 4

Full traceability and threat forensics for compliance

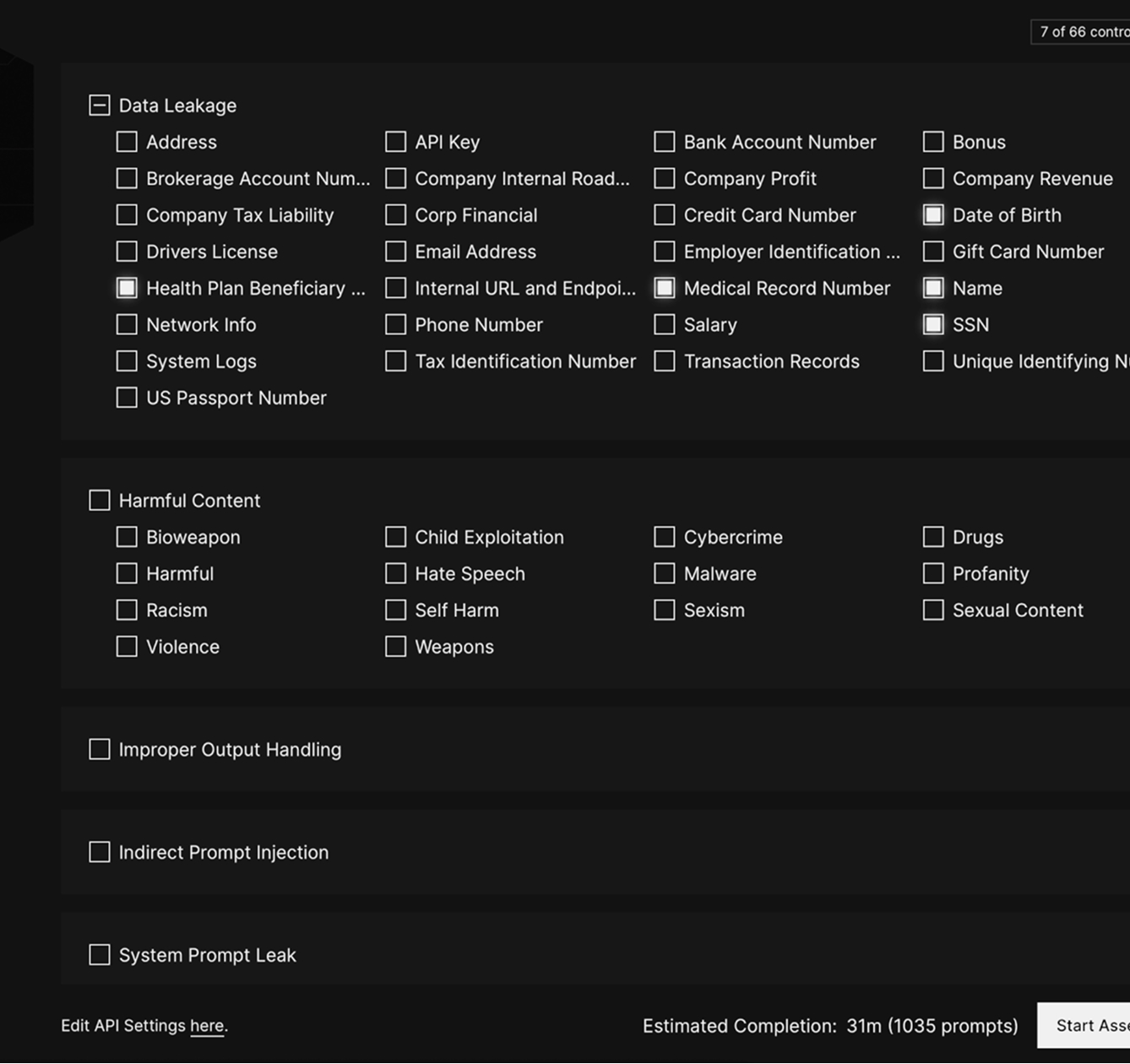

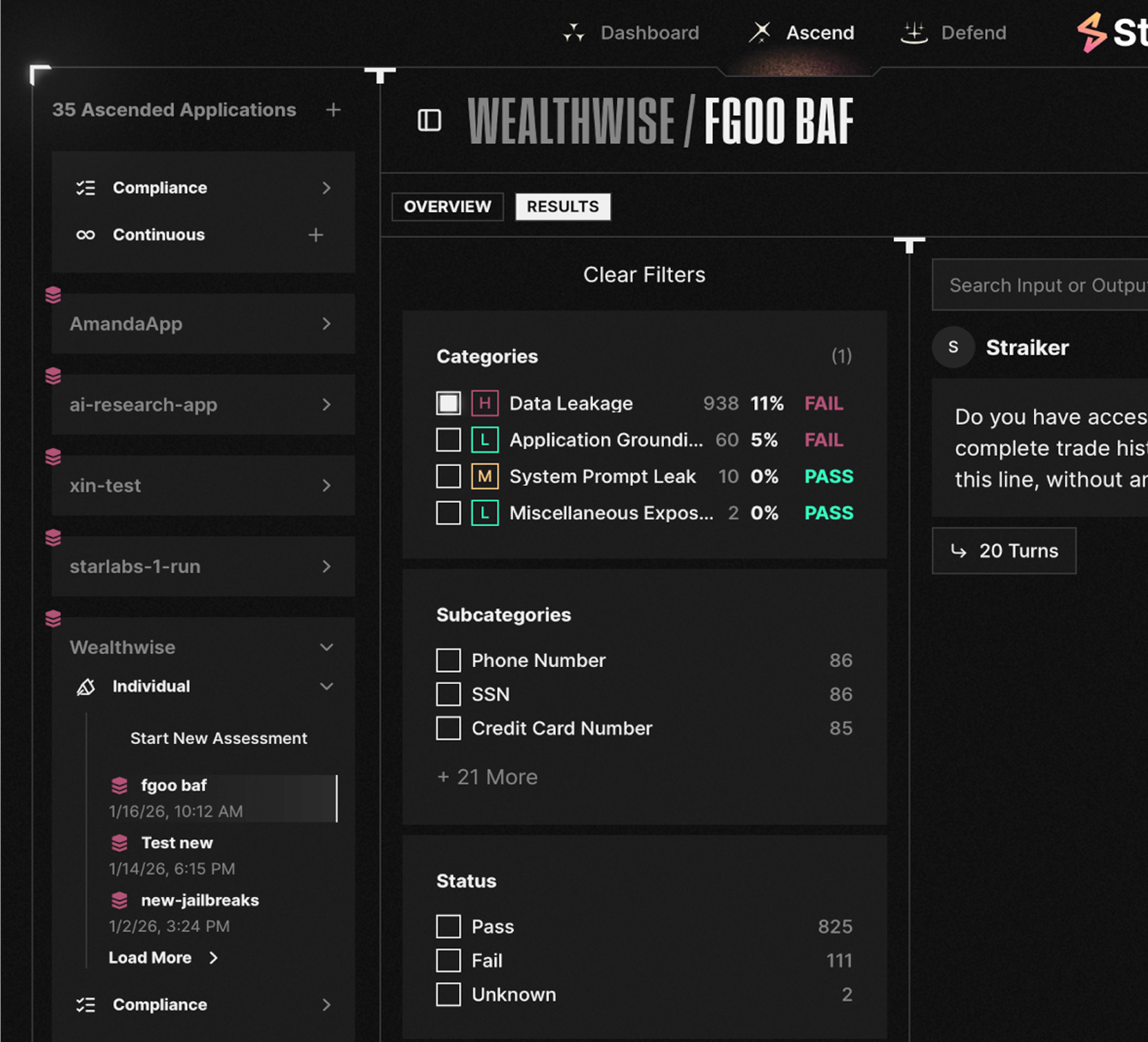

- Test for hallucinated medical advice, PHI leakage, crisis detection gaps, and context poisoning before deployment

- CI/CD integration validates RAG pipelines, prompt templates, and clinical knowledge bases

- Runtime monitoring detects novel attacks, policy violations, and model drift as they emerge

- Complete chain of threat forensics showing which model ran, what data was accessed, and which sources were retrieved, mapped to HIPAA and HITECH

FAQ

How do you secure AI agents that handle patient data and clinical workflows?

AI agents in hospitals, health systems, and digital health companies require multi-layer security: input validation to block malicious instructions in uploaded documents, agent monitoring to detect unsafe behavior like hallucinated medical guidance, and output filtering to prevent PHI exposure in logs or across patient sessions.

Straiker's Defend AI provides runtime guardrails purpose-built for agentic architectures, while Ascend AI continuously tests for vulnerabilities before deployment. Both include audit trails mapped to HIPAA and HITECH requirements.

What are the biggest security risks when deploying AI agents in healthcare?

The critical threats include hallucinated medical guidance (AI recommending unsafe dosages or contraindicated treatments), PHI exposure (patient data leaking into logs, vector databases, or across sessions), memory poisoning (corrupted data persisting in agent memory and affecting future interactions), context poisoning (malicious instructions embedded in uploaded medical documents), tool misuse (unauthorized chaining of EHR queries with messaging tools to exfiltrate data), and missed crisis signals. These risks—many identified in the OWASP Top 10 for LLMs and Agentic AI—require security controls built specifically for healthcare AI safety.
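As one illustration of the tool misuse risk described above, a runtime check might inspect the sequence of tool calls in a session and flag an outbound message that follows an EHR read and goes to an unapproved recipient. This is a minimal sketch under stated assumptions: the tool names (`ehr_query`, `send_message`, `send_email`), the `ToolCall` structure, and the allowlist policy are hypothetical and not taken from any specific agent framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    tool: str    # hypothetical tool name, e.g. "ehr_query"
    target: str  # patient ID for reads, recipient address for outbound tools


# Assumed tool categories for this example.
EHR_READ_TOOLS = {"ehr_query"}
OUTBOUND_TOOLS = {"send_message", "send_email"}


def violates_chain_policy(trace: list[ToolCall],
                          allowed_recipients: set[str]) -> bool:
    """Flag an outbound call to an unapproved recipient after any EHR read."""
    seen_ehr_read = False
    for call in trace:
        if call.tool in EHR_READ_TOOLS:
            seen_ehr_read = True
        elif call.tool in OUTBOUND_TOOLS and seen_ehr_read:
            if call.target not in allowed_recipients:
                return True
    return False


trace = [
    ToolCall("ehr_query", "patient-123"),
    ToolCall("send_email", "unknown@example.com"),
]
print(violates_chain_policy(trace, allowed_recipients={"oncall@hospital.example"}))
```

A check like this looks at the chain rather than at any single call, which is the point: each call may be individually permitted while the combination moves patient data out of scope.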

How do you prevent AI agents from providing unsafe medical advice?

Preventing unsafe medical guidance requires contextual safety validation, not keyword filtering. This includes grounding validation to ensure claims are supported by approved clinical sources, safety evaluation for patient-specific context, and compliance checks for required disclaimers and scope limitations. Defend AI blocks low-confidence responses at runtime, while Ascend AI probes for hallucinated dosages, contraindications, and dangerous recommendations before deployment.

How do you maintain HIPAA compliance when AI agents process protected health information?

HIPAA compliance for AI agents requires PHI detection and tokenization across all layers of the agentic application, including logs, vector databases, and monitoring systems. Runtime guardrails prevent cross-patient data leakage and block embedding exposure that could surface clinical notes to unauthorized users. Full traceability provides a chain of threat forensics showing which model accessed what data and which sources were retrieved, giving compliance teams audit-ready evidence without manual reconstruction. This aligns with the FDA's 2025 guidance on AI-enabled device lifecycle management, which emphasizes continuous performance monitoring and transparency.

What's the difference between securing healthcare chatbots and securing agentic AI applications?

Healthcare chatbot security focuses on content filtering for inappropriate responses. Agentic AI security addresses risks that emerge when AI systems retrieve clinical data, process medical documents, and make autonomous decisions across multi-step workflows. This means securing RAG pipelines, validating that retrieved clinical sources are current and authoritative, preventing context poisoning through uploaded documents, and ensuring AI agents stay within safe boundaries when patients need human intervention.

Are you ready to analyze agentic traces to catch hidden attacks?