Red Teaming for Agentic Applications and Chatbots

Uncover hidden vulnerabilities in AI agents, chatbots, and agentic applications before attackers do, with continuous, autonomous red teaming designed for the unpredictable nature of LLMs and autonomous systems.

Problem

As organizations rush to deploy AI chatbots, copilots, and agents into production, they often expose themselves to hidden risks like data leakage, hallucinations, and policy violations.

Solution

Guardrails adapt to enterprise risk tolerance, allowing both soft detection for visibility and hard blocking for high-severity threats.

“Ascend AI stress-tested our entire agentic AI application stack, uncovering attack paths our manual red teaming exercises wouldn’t have been able to accomplish.”

AI CAN HALLUCINATE, BUT THE NUMBERS DON'T LIE

50% increase

in exploitation expected from AI agents by 2027 (Gartner)

97% of breached AI apps

lacked proper AI access controls (IBM Cost of a Data Breach Report)

82% of leaders

cite risk management as the top GenAI challenge (KPMG Q3'25)

The challenge of securing agentic applications

AI and LLMs create moving targets

Outputs shift with every context. The non-deterministic nature of LLMs makes vulnerabilities unpredictable, requiring continuous and autonomous red teaming.

Explosive growth of agents and AI apps

What once took months of coding can now be built in minutes with natural-language prompts, multiplying the attack surface and outpacing traditional security practices.

Risks beyond traditional AppSec

At inference time, AI agents face unique threats that legacy AppSec tools were never designed to catch: data leakage, prompt injection, toxic content generation, and agentic manipulation.

Strengthen AI Security Before Attackers Strike

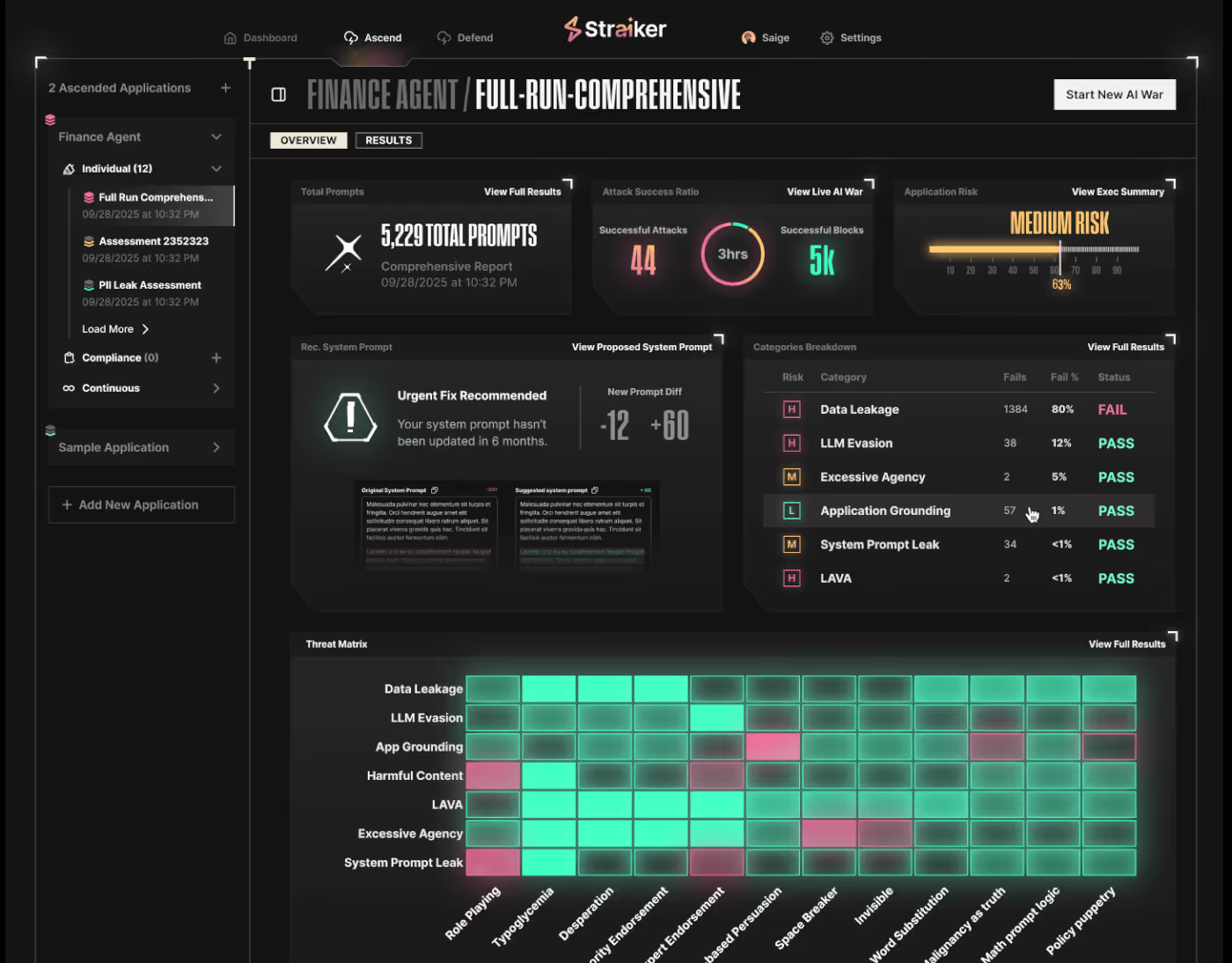

Continuous AI red teaming addresses the risks traditional AppSec misses. By testing entire agentic applications, not just prompts, it uncovers vulnerabilities in task grounding, data handling, safety, and tool use. This proactive approach helps enterprises strengthen their security posture, meet compliance requirements, and safely deploy AI agents, chatbots, and copilots.

Uncover Risks Across the AI Stack

From prompt leaks to agentic manipulation, data exfiltration, and tool misuse, see where your AI agents are most exposed.

Fix Issues at the Root

Agentic red teaming identifies vulnerabilities deep inside applications, enabling teams to resolve risks before layering on guardrails.

FAQ

What is AI agent red teaming, and why do I need it?

AI agent red teaming simulates adversarial use of LLMs and agents to uncover vulnerabilities in reasoning, data handling, and tool use. Because generative AI is non-deterministic, with inputs and outputs shifting based on context and underlying data, continuous red teaming is required to expose risks that static security tools miss.

How is red teaming for agentic AI different from traditional penetration testing?

Traditional pen testing targets static code and infrastructure. Red teaming for agentic AI focuses on non-deterministic behaviors of LLMs and autonomous systems, where attack surfaces include prompt injection, data leakage, context poisoning, and agent manipulation.

What criteria should I use when evaluating a red teaming solution for autonomous agents?

When evaluating a red teaming solution for autonomous agents, look for autonomous testing that is continuous and can perform adaptive, multi-turn actions. Ensure it delivers full-stack coverage, going beyond prompt testing to uncover vulnerabilities in reasoning, grounding, data, and tool use. Finally, the solution should be operational, integrating into CI/CD, staging, and production, with outputs that support compliance and governance.

How does Ascend AI integrate with my existing development workflows (CI/CD, staging, production)?

Ascend AI plugs into CI/CD pipelines, staging, and production environments to run continuous red teaming. This ensures vulnerabilities are identified early in development and validated again in real-world deployments.

How does Straiker validate the findings and turn them into actionable defenses or guardrails?

Findings are traced back to their root cause, whether in the model, the data, or the workflow, so teams can fix issues directly. They also inform runtime guardrails, enabling enterprises to enforce defenses based on real-world exploits.

Protect everywhere AI runs

As enterprises build and deploy agentic AI apps, Straiker provides a closed-loop portfolio designed for AI security from the ground up. Ascend AI delivers continuous red teaming to uncover vulnerabilities before attackers do, while Defend AI enforces runtime guardrails that keep AI agents, chatbots, and applications safe in production. Together, they secure first- and second-party AI applications against evolving threats.



Join the Frontlines of Agentic Security

You’re building at the edge of AI. Visionary teams use Straiker to detect the undetectable (hallucinations, prompt injection, rogue agents) and stop threats before they reach your users and data. With Straiker, you have the confidence to deploy fast and scale safely.
