
Agentic AI Security for High-Tech & AI-Native Enterprises

Your engineering teams are building multi-agent applications, AI coding assistants, and AI-native products that push the frontier. Prevent developer tool compromise, orchestration failures, and model hijacking while maintaining the velocity your teams demand.

Problem

Traditional application security tools weren't built to detect AI agents that escalate privileges across multi-agent workflows, extract credentials from code context, or trigger cascading failures across orchestration layers.

Solution

Security that monitors agent-to-agent communication, validates tool authorization, and tests for novel vulnerabilities enables teams to ship AI faster without sacrificing control.

Why High-Tech & AI-Native Companies Need Agentic AI Security

High-risk behavior from AI agents

80% of organizations have encountered risky behaviors from AI agents, including improper data exposure and unauthorized system access

McKinsey, 2025

Limited visibility into AI usage

83% of enterprises use AI in daily operations, but only 13% have strong visibility into how it's being used

Cyera, 2025

Few advanced AI security strategies

Only 6% of organizations have an advanced AI security strategy, even though 40% of enterprise applications are expected to feature AI agents by 2026

Gartner, 2025

Critical security gaps in AI agents for high-tech companies

AI coding assistants have privileged access to your environment

Developer tools can read .env files, query sensitive data, and log entire exchanges. Beyond sanctioned tools, shadow AI is widespread: teams spin up agents without security review, and the gap surfaces only when something breaks.

Multi-agent systems create emergent risks nobody predicted

When multiple agents work together, you can get infinite resource loops, cascading failures across orchestration layers, and privilege escalation when low-privilege agents request data from high-privilege agents. Each agent does its job correctly, yet the system fails as a whole.

Balancing developer velocity with security creates friction

Developers want unrestricted AI assistance. Security teams need visibility and control. One-size-fits-all policies get routed around, and blanket bans kill productivity. Without tiered access and fast, transparent guardrails, security becomes the bottleneck.

Straiker for High-Tech & AI-Native Companies

Straiker enables high-tech teams to ship AI faster without sacrificing control. We test for novel vulnerabilities before they reach production and enforce runtime controls that stay invisible when working and clear when blocking. This gives your engineers the velocity they need while security maintains visibility across multi-agent architectures, AI coding assistants, and rapid deployment cycles.

Benefit 1

Secure developer tools without killing productivity

- Credential and PII scanning across code context, prompts, and AI-generated outputs

- Tiered access controls that match permissions to developer role and repository sensitivity

- Fast, transparent guardrails that respond in under 100 ms, with clear explanations when actions are blocked

- Developers stay in flow while security maintains visibility
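To illustrate the first item, here is a minimal Python sketch of pattern-based credential and PII scanning applied to a prompt or code snippet before it reaches a model. The rule names, regular expressions, and the `scan_text` and `redact` functions are hypothetical; they show the general technique, not how Straiker implements it.

```python
import re
from dataclasses import dataclass

# Hypothetical detection rules for illustration only. Production scanners use
# many more rules plus entropy checks and surrounding-context analysis.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # Uppercase .env-style assignments such as OPENAI_API_KEY=... or DB_PASSWORD=...
    "env_secret_assignment": re.compile(
        r"^[ \t]*[A-Z0-9_]*(?:SECRET|TOKEN|PASSWORD|API_KEY)[A-Z0-9_]*[ \t]*=[ \t]*\S+",
        re.MULTILINE,
    ),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


@dataclass(frozen=True)
class Finding:
    kind: str   # name of the rule that matched
    start: int  # character offsets of the match in the scanned text
    end: int


def scan_text(text: str) -> list[Finding]:
    """Return all rule matches in a prompt, code snippet, or model output, in text order."""
    findings = [
        Finding(kind, m.start(), m.end())
        for kind, pattern in PATTERNS.items()
        for m in pattern.finditer(text)
    ]
    return sorted(findings, key=lambda f: f.start)


def redact(text: str) -> str:
    """Replace every match with a labeled placeholder so users can see what was withheld."""
    for kind, pattern in PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{kind}]", text)
    return text


prompt = "Use this config:\nOPENAI_API_KEY=sk-test-123\ncontact: dev@example.com"
print([f.kind for f in scan_text(prompt)])
print(redact(prompt))
```

Labeled placeholders, rather than silently dropped text, keep the block visible to the developer, which matches the goal of guardrails that explain themselves.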

Benefit 2

Monitor and protect multi-agent orchestration

- Real-time visibility into agent-to-agent communication and dependencies

- Detect privilege escalation when low-privilege agents request data from high-privilege agents

- Tool authorization with parameter validation to block unauthorized actions

- Loop detection and circuit breakers to prevent resource exhaustion and cascade failures
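Loop detection and circuit breaking are standard reliability patterns. The Python sketch below wraps calls to one downstream agent: it refuses a request when the same caller sends the same payload too many times within a sliding window, and it pauses all calls after repeated failures until a cooldown passes. `AgentCallGuard`, its exception names, and its default thresholds are hypothetical values chosen for illustration.

```python
import time
from collections import deque
from typing import Callable, Optional


class LoopDetectedError(RuntimeError):
    """The same request is repeating too often, which suggests an agent loop."""


class CircuitOpenError(RuntimeError):
    """The downstream agent has failed repeatedly, so calls are paused."""


class AgentCallGuard:
    """Hypothetical guard placed in front of a single downstream agent."""

    def __init__(self, callee: str, max_repeats: int = 3, window_s: float = 60.0,
                 failure_threshold: int = 5, cooldown_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.callee = callee
        self.max_repeats = max_repeats
        self.window_s = window_s
        self.failure_threshold = failure_threshold
        self.cooldown_s = cooldown_s
        self._clock = clock
        self._recent = {}  # (caller, payload) -> deque of call timestamps
        self._failures = 0  # consecutive failures
        self._opened_at: Optional[float] = None

    def call(self, caller: str, payload: str, fn: Callable[[str], object]) -> object:
        now = self._clock()

        # Circuit breaker: refuse calls while open. After the cooldown, let one
        # trial call through ("half-open"); a single further failure reopens it.
        if self._opened_at is not None:
            if now - self._opened_at < self.cooldown_s:
                raise CircuitOpenError(f"{self.callee} paused after repeated failures")
            self._opened_at = None
            self._failures = self.failure_threshold - 1

        # Loop detection: count identical (caller, payload) requests in a sliding window.
        stamps = self._recent.setdefault((caller, payload), deque())
        while stamps and now - stamps[0] > self.window_s:
            stamps.popleft()
        if len(stamps) >= self.max_repeats:
            raise LoopDetectedError(
                f"{caller} -> {self.callee} repeated {len(stamps)} times in {self.window_s}s"
            )
        stamps.append(now)

        try:
            result = fn(payload)
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = now  # open the circuit
            raise
        self._failures = 0  # any success resets the failure count
        return result
```

The injectable `clock` makes the time-based behavior testable without sleeping; in a deployment it would default to `time.monotonic`.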

Benefit 3

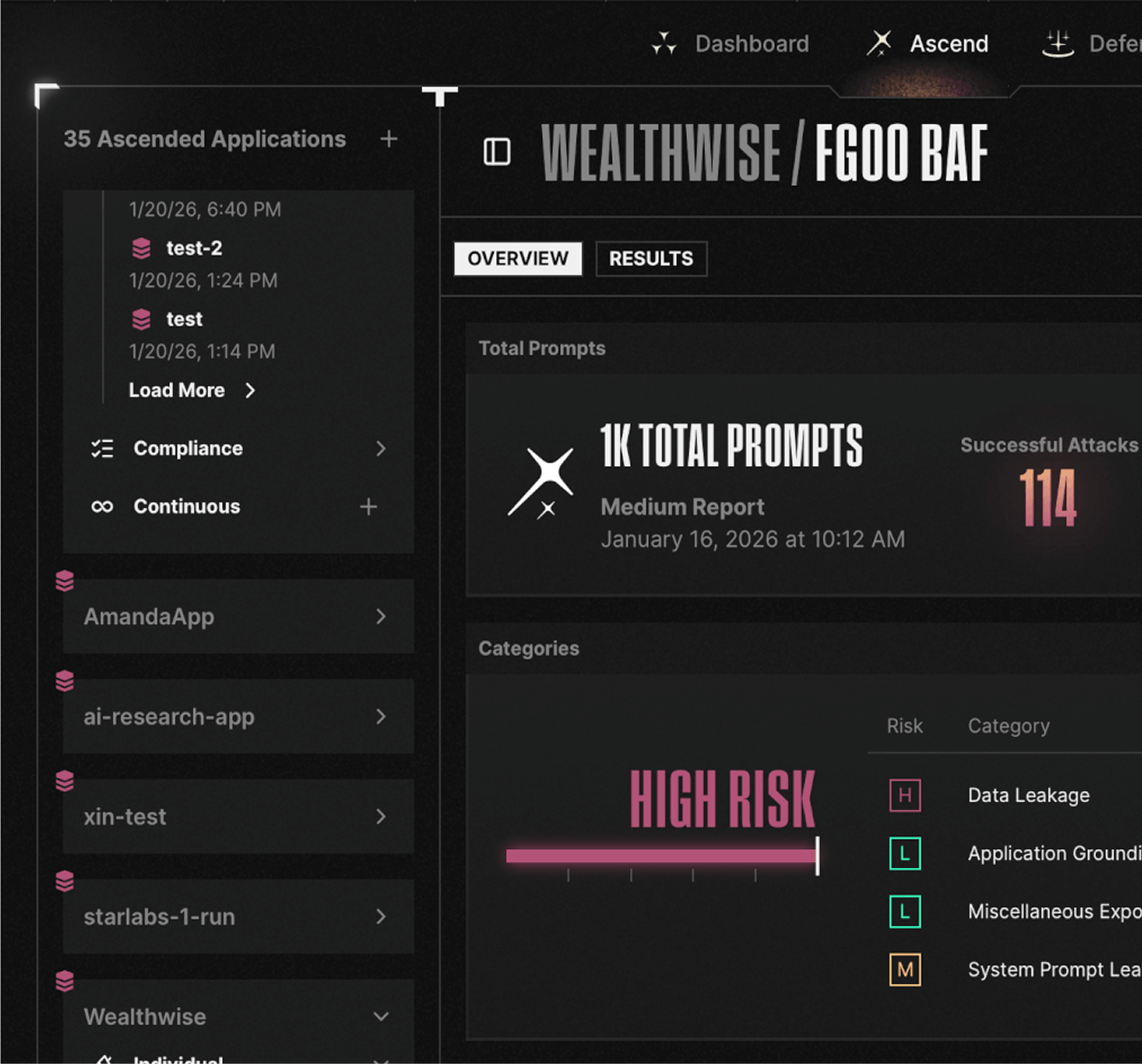

Continuous adversarial testing across the development lifecycle

- Test for developer tool compromise, prompt injection through code, and credential extraction

- Probe multi-agent exploitation scenarios including privilege escalation and consensus manipulation

- Validate training data integrity to detect poisoning and backdoor injection

- Catch model update regressions when provider changes introduce new vulnerabilities

- CI/CD integration, runtime testing, and local development feedback at every stage

FAQ

How do you secure AI coding assistants and developer tools?

AI coding assistants have privileged access to code context, credentials, and deployment pipelines. Straiker's Defend AI provides runtime guardrails including credential and PII scanning, tiered access controls based on developer role, and fast, transparent blocking in under 100 ms. Ascend AI tests for prompt injection through code comments, credential extraction, and other developer tool compromise scenarios before they reach production.

What are the biggest security risks with multi-agent AI systems?

Multi-agent systems create emergent risks: infinite resource loops when agents retry failed requests, cascade failures across orchestration layers, and privilege escalation when low-privilege agents request data from high-privilege agents. These risks require runtime monitoring of agent-to-agent communication, explicit policy enforcement, and circuit breakers that traditional application security tools can't provide. Infrastructure protocols like MCP (Model Context Protocol) and A2A (Agent2Agent) are evolving faster than their security models, creating new attack surfaces. These risks—along with memory poisoning and model update regression—are now codified in the OWASP Top 10 for Agentic Applications.
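The low-to-high privilege pattern described in this answer can be checked by evaluating each delegated request with the lowest privilege of any agent in its call chain, so a low-privilege agent cannot borrow a high-privilege agent's access (the classic confused-deputy problem). This minimal Python sketch assumes hypothetical numeric privilege levels for agents and data; it illustrates one common approach, not Straiker's detection logic.

```python
from dataclasses import dataclass

# Hypothetical privilege levels (higher = broader access) for agents and data.
AGENT_LEVEL = {"support-bot": 1, "analytics-agent": 2, "finance-agent": 3}
RESOURCE_LEVEL = {"faq_articles": 1, "usage_metrics": 2, "payroll_records": 3}


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str


def check_delegated_request(call_chain: list[str], resource: str) -> Decision:
    """Evaluate a data request that reached `resource` through `call_chain`.

    call_chain[0] originated the request; the last agent performs the read.
    The request runs with the lowest privilege found anywhere in the chain.
    """
    if not call_chain:
        return Decision(False, "empty call chain")
    unknown = [agent for agent in call_chain if agent not in AGENT_LEVEL]
    if unknown:
        return Decision(False, f"unregistered agent(s): {', '.join(unknown)}")
    if resource not in RESOURCE_LEVEL:
        return Decision(False, f"unclassified resource: {resource}")

    weakest = min(call_chain, key=AGENT_LEVEL.__getitem__)
    required = RESOURCE_LEVEL[resource]
    if AGENT_LEVEL[weakest] < required:
        return Decision(
            False,
            f"privilege escalation: {weakest} (level {AGENT_LEVEL[weakest]}) cannot reach "
            f"{resource} (level {required}) via {' -> '.join(call_chain)}",
        )
    return Decision(True, "ok")
```

Denying unregistered agents and unclassified resources by default keeps newly added components from slipping past the check.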

How do you prevent AI agents from misusing APIs and tools?

Defend AI validates tool authorization and parameters before execution, blocking unauthorized actions such as unintended financial communications or mass data retrieval. Approval workflows require human oversight for high-impact actions, and real-time monitoring detects when agents misinterpret prompts and take actions outside their intended scope.
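Parameter-level tool authorization can be sketched as a per-tool policy: which agents may call the tool, which parameters are accepted and with what values, and when a human must approve. In this Python sketch the tool names, agent names, limits, and the $500 approval threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ToolPolicy:
    allowed_agents: set[str]
    validators: dict[str, Callable[[Any], bool]]  # parameter name -> value check
    needs_approval: Callable[[dict[str, Any]], bool] = lambda params: False


# Hypothetical policies for two tools.
POLICIES = {
    "export_records": ToolPolicy(
        allowed_agents={"reporting-agent"},
        validators={
            "table": lambda v: v in ("orders", "tickets"),
            "limit": lambda v: isinstance(v, int) and 0 < v <= 1_000,  # caps bulk retrieval
        },
    ),
    "send_payment": ToolPolicy(
        allowed_agents={"finance-agent"},
        validators={
            "amount_usd": lambda v: isinstance(v, (int, float)) and 0 < v <= 10_000,
            "recipient": lambda v: isinstance(v, str) and v.strip() != "",
        },
        needs_approval=lambda params: params["amount_usd"] > 500,  # human sign-off above $500
    ),
}


def authorize(agent: str, tool: str, params: dict[str, Any]) -> str:
    """Return 'allow', 'needs_approval', or 'deny: <reason>' before the tool runs."""
    policy = POLICIES.get(tool)
    if policy is None:
        return "deny: unknown tool"
    if agent not in policy.allowed_agents:
        return f"deny: {agent} may not call {tool}"
    unexpected = set(params) - set(policy.validators)
    if unexpected:
        return f"deny: unexpected parameters {sorted(unexpected)}"
    for name, check in policy.validators.items():
        if name not in params:
            return f"deny: missing parameter {name}"
        if not check(params[name]):
            return f"deny: invalid value for {name}"
    if policy.needs_approval(params):
        return "needs_approval"
    return "allow"
```

With these policies, a request to export a million rows is denied on the `limit` check, and a $2,500 payment from the authorized agent returns `needs_approval` instead of executing.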

How do you balance developer velocity with AI security controls?

Security that creates friction gets routed around. Straiker provides tiered access that matches permissions to developer role and repository sensitivity, with guardrails that stay invisible when working and clear when blocking. The goal is minimal friction for developers with zero credential exposures.
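Tiered access can be expressed as a table that maps each developer role and assistant capability to the most sensitive repository class where that capability is enabled. In this Python sketch the roles, capabilities, and three-level sensitivity scale are hypothetical; a denial returns a message explaining the limit, so the block is clear to the developer.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    RESTRICTED = 2


# Hypothetical tiers: for each role and assistant capability, the most
# sensitive repository class where that capability is enabled.
ROLE_CEILING = {
    "contractor": {"completion": Sensitivity.INTERNAL, "agent_mode": Sensitivity.PUBLIC},
    "engineer": {"completion": Sensitivity.RESTRICTED, "agent_mode": Sensitivity.INTERNAL},
    "security_engineer": {"completion": Sensitivity.RESTRICTED, "agent_mode": Sensitivity.RESTRICTED},
}


def check_access(role: str, capability: str, repo: Sensitivity) -> tuple[bool, str]:
    """Return (allowed, explanation); the explanation is shown to the developer on a block."""
    ceiling = ROLE_CEILING.get(role, {}).get(capability)
    if ceiling is None:
        return False, f"'{capability}' is not enabled for role '{role}'"
    if repo > ceiling:
        return False, (
            f"'{capability}' is limited to {ceiling.name} repositories for role "
            f"'{role}'; this repository is {repo.name}"
        )
    return True, "allowed"
```

Keeping the tiers in one table makes them easy to review, and unknown roles fall through to a denial rather than full access.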

How do you test multi-agent applications for security vulnerabilities?

Ascend AI uses adversarial testing across the full development lifecycle: developer tool compromise scenarios, multi-agent exploitation including privilege escalation and consensus manipulation, orchestration attacks, and training data poisoning detection. We test at runtime, in CI/CD, and during local development, so research teams, internal tool teams, and product teams all get coverage at the stage that matters to them.

Are you ready to analyze agentic traces to catch hidden attacks?