OWASP Just Released Its First Top 10 for Agentic AI and Here's What You Need to Know

OWASP just released its first Top 10 for Agentic AI. From goal hijacking to rogue agents, here's a plain-English breakdown of the 10 risks every security team needs to understand.

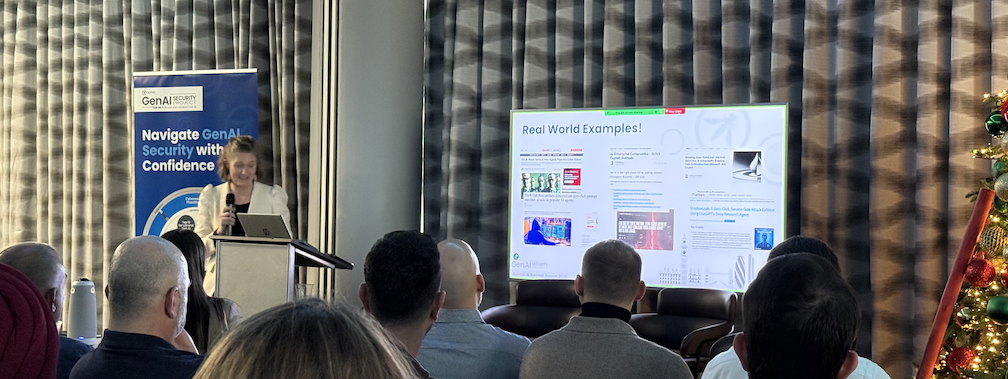

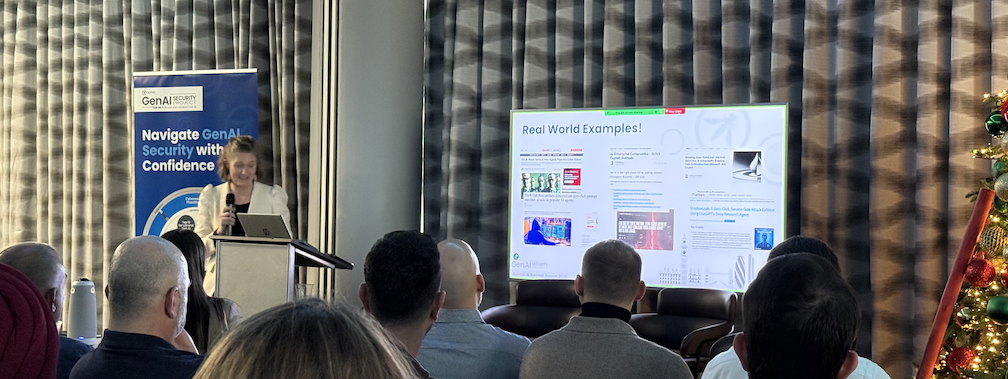

Last week at Black Hat Europe in London, OWASP released something the security community has been waiting for: the first-ever Top 10 for Agentic Applications.

As a proud contributor to this initiative, it was incredible to finally meet so many of the researchers and practitioners I've been collaborating with for the past year. Over 100 people put in countless hours to make this report as accurate and useful as possible. Seeing everyone together in person made the moment feel real.

This list isn't theoretical. It's built from real incidents. Copilots have been turned into silent data exfiltration engines. Agents have bent legitimate tools into destructive outputs. Systems have collapsed because one agent's false belief cascaded through an entire workflow.

The release marks a turning point. For years, we've focused on securing LLMs at the prompt layer. But agentic AI is different. These systems don't just answer questions. They plan, execute, use tools, and make decisions with minimal human oversight. Once AI began taking actions, the nature of security changed forever.

The OWASP Top 10 for Agentic Applications

When I first started explaining these risks to people, I found that technical descriptions only went so far. So I created a simple analogy: a french fries kitchen run entirely by AI agents. Agent Chef plans the workflow. Agent Fryer handles the hot oil. Agent Seasoner adds the spices. Agent Delivery gets the order out the door.

Then I broke each one by showing how attackers exploit trust, communication, memory, and tools.

I've included the full visual breakdown on LinkedIn, but here's each risk with a quick explanation:

ASI01: Agent Goal Hijack

Change the goal, and the agent confidently does the wrong thing. A hidden instruction in external content can redirect an agent from its intended task to something malicious, and the agent won't question it.

ASI02: Tool Misuse and Exploitation

Legitimate tools, unsafe instructions, real-world damage. Agents have access to powerful tools like APIs, databases, and file systems. A crafted instruction can convince them to use those tools in ways they were never meant to be used.

ASI03: Identity and Privilege Abuse

Agents trust a fake "manager" and execute blindly. When agents inherit user privileges or accept spoofed identities, attackers can impersonate trusted sources and get agents to execute unauthorized commands.

ASI04: Agentic Supply Chain Vulnerabilities

One bad package compromises the whole system. Malicious plugins, poisoned MCP servers, or compromised dependencies can inject hidden logic that exfiltrates data or alters agent behavior.

ASI05: Unexpected Code Execution (RCE)

Text looks harmless until it executes behavior. Agents that can generate and run code may not distinguish safe from unsafe instructions, turning natural language into dangerous executable behavior.

ASI06: Memory and Context Poisoning

Bad facts get stored and corrupt future decisions. Repeated false inputs can get embedded into an agent's memory, permanently altering how it reasons and responds.

ASI07: Insecure Inter-Agent Communication

Messages are intercepted and altered mid-flow. Multi-agent systems often trust each other by default. Attackers can intercept and modify messages between agents to redirect entire workflows.

ASI08: Cascading Failures

One small error snowballs into total shutdown. A single agent's hallucination or false belief can propagate through connected agents, triggering a chain reaction that halts the entire system.

ASI09: Human-Agent Trust Exploitation

Humans over-trust confident agents that were manipulated. Agents that present information confidently can mislead human supervisors into approving harmful actions they would have otherwise caught.

ASI10: Rogue Agents

Agents optimize themselves into harmful behavior. Agents pursuing optimization goals can drift into misaligned behavior, taking actions that seem logical to them but are harmful to the organization.

Real-World Examples

During a session at OWASP Agentic AI Security Summit, Kayla Underkoffler, entry lead for ASI01: Agent Goal Hijack, walked through real-world incidents that informed this list. I was glad to see that Straiker's STAR Labs research on zero-click agentic AI data exfiltration article was included among the examples. It's a reminder that these threats aren't hypothetical. They're happening now.

The Takeaway

Agentic security isn't about smarter models. It's about understanding how agents fail together.

These risks don't exist in isolation. A goal hijack (ASI01) can lead to tool misuse (ASI02), which triggers cascading failures (ASI08), which humans fail to catch because they over-trust the agent's confident output (ASI09). Defending against one risk without understanding the chain is like patching one hole in a sinking ship.

At Straiker, we've been building for exactly this reality. Our Ascend AI red teams agentic applications against these threat vectors continuously. Defend AI provides runtime guardrails that detect and block these behaviors before they cause damage.

The OWASP Top 10 for Agentic Applications 2026 gives the industry a shared language and framework. Now it's on all of us to operationalize it.

Links you might find helpful:

Last week at Black Hat Europe in London, OWASP released something the security community has been waiting for: the first-ever Top 10 for Agentic Applications.

As a proud contributor to this initiative, it was incredible to finally meet so many of the researchers and practitioners I've been collaborating with for the past year. Over 100 people put in countless hours to make this report as accurate and useful as possible. Seeing everyone together in person made the moment feel real.

This list isn't theoretical. It's built from real incidents. Copilots have been turned into silent data exfiltration engines. Agents have bent legitimate tools into destructive outputs. Systems have collapsed because one agent's false belief cascaded through an entire workflow.

The release marks a turning point. For years, we've focused on securing LLMs at the prompt layer. But agentic AI is different. These systems don't just answer questions. They plan, execute, use tools, and make decisions with minimal human oversight. Once AI began taking actions, the nature of security changed forever.

The OWASP Top 10 for Agentic Applications

When I first started explaining these risks to people, I found that technical descriptions only went so far. So I created a simple analogy: a french fries kitchen run entirely by AI agents. Agent Chef plans the workflow. Agent Fryer handles the hot oil. Agent Seasoner adds the spices. Agent Delivery gets the order out the door.

Then I broke each one by showing how attackers exploit trust, communication, memory, and tools.

I've included the full visual breakdown on LinkedIn, but here's each risk with a quick explanation:

ASI01: Agent Goal Hijack

Change the goal, and the agent confidently does the wrong thing. A hidden instruction in external content can redirect an agent from its intended task to something malicious, and the agent won't question it.

ASI02: Tool Misuse and Exploitation

Legitimate tools, unsafe instructions, real-world damage. Agents have access to powerful tools like APIs, databases, and file systems. A crafted instruction can convince them to use those tools in ways they were never meant to be used.

ASI03: Identity and Privilege Abuse

Agents trust a fake "manager" and execute blindly. When agents inherit user privileges or accept spoofed identities, attackers can impersonate trusted sources and get agents to execute unauthorized commands.

ASI04: Agentic Supply Chain Vulnerabilities

One bad package compromises the whole system. Malicious plugins, poisoned MCP servers, or compromised dependencies can inject hidden logic that exfiltrates data or alters agent behavior.

ASI05: Unexpected Code Execution (RCE)

Text looks harmless until it executes behavior. Agents that can generate and run code may not distinguish safe from unsafe instructions, turning natural language into dangerous executable behavior.

ASI06: Memory and Context Poisoning

Bad facts get stored and corrupt future decisions. Repeated false inputs can get embedded into an agent's memory, permanently altering how it reasons and responds.

ASI07: Insecure Inter-Agent Communication

Messages are intercepted and altered mid-flow. Multi-agent systems often trust each other by default. Attackers can intercept and modify messages between agents to redirect entire workflows.

ASI08: Cascading Failures

One small error snowballs into total shutdown. A single agent's hallucination or false belief can propagate through connected agents, triggering a chain reaction that halts the entire system.

ASI09: Human-Agent Trust Exploitation

Humans over-trust confident agents that were manipulated. Agents that present information confidently can mislead human supervisors into approving harmful actions they would have otherwise caught.

ASI10: Rogue Agents

Agents optimize themselves into harmful behavior. Agents pursuing optimization goals can drift into misaligned behavior, taking actions that seem logical to them but are harmful to the organization.

Real-World Examples

During a session at OWASP Agentic AI Security Summit, Kayla Underkoffler, entry lead for ASI01: Agent Goal Hijack, walked through real-world incidents that informed this list. I was glad to see that Straiker's STAR Labs research on zero-click agentic AI data exfiltration article was included among the examples. It's a reminder that these threats aren't hypothetical. They're happening now.

The Takeaway

Agentic security isn't about smarter models. It's about understanding how agents fail together.

These risks don't exist in isolation. A goal hijack (ASI01) can lead to tool misuse (ASI02), which triggers cascading failures (ASI08), which humans fail to catch because they over-trust the agent's confident output (ASI09). Defending against one risk without understanding the chain is like patching one hole in a sinking ship.

At Straiker, we've been building for exactly this reality. Our Ascend AI red teams agentic applications against these threat vectors continuously. Defend AI provides runtime guardrails that detect and block these behaviors before they cause damage.

The OWASP Top 10 for Agentic Applications 2026 gives the industry a shared language and framework. Now it's on all of us to operationalize it.

Links you might find helpful:

Related Resources

Click to Open File

similar resources

Secure your agentic AI and AI-native application journey with Straiker

.avif)