How to Secure AI Agents in High-Tech Companies

High-tech companies are inventing the future of AI—and its security challenges. Learn how to secure AI-native products, MCP integrations, and novel agent vulnerabilities at competitive speed.

High-tech companies face many of the same AI security challenges as financial services and healthcare: prompt injection, data leakage, tool misuse. Like banks, they need to prevent unauthorized actions when agents have system access. Like hospitals, they must validate that AI outputs don't cause harm. And the risks are already materializing: 80% of organizations report encountering risky behaviors from AI agents, including improper data exposure and unauthorized system access.

But high-tech operates under fundamentally different constraints. Financial services is focused on operationalizing AI within regulatory frameworks. Healthcare adopts AI with intense scrutiny on patient safety. High-tech companies, by contrast, are inventing the future of AI: building AI-native products, experimenting with autonomous agents, and pushing capabilities that don't have established security patterns yet.

The difference isn't complexity. Most high-tech companies aren't running elaborate multi-agent orchestrations. The difference is velocity plus experimentation. You're securing systems that didn't exist six months ago, using approaches that haven't been documented, while foundation model providers update capabilities monthly. And you can't wait for security to "catch up" because competitors are shipping AI features now.

Four Characteristics That Makes High-Tech Different

High-tech teams are:

- Building AI-native products where AI isn't a feature, it's the core experience. The entire application is an agent reasoning about user intent and taking actions.

- Experimenting with capabilities that push boundaries: tool use, memory, web access, code execution. They're testing what's possible, not implementing what's proven.

- Moving at competitive speed where advantage is measured in weeks. Waiting for comprehensive security frameworks means falling behind.

- Dealing with constant change as foundation model providers update models, change behavior, and introduce new capabilities monthly.

You're securing systems that didn't exist six months ago using approaches that haven't been documented yet.

The Reality of High-Tech AI Security

Most AI coding agents run unsecured. Teams using Cursor, Windsurf, or GitHub Copilot rarely put guardrails on them. Additionally, shadow AI is everywhere. Beyond coding assistants, teams are spinning up AI tools without security review like chatbots, document analyzers, workflow automations. IT and security teams often don't know these agents exist until something breaks. The velocity argument wins: developers need unrestricted AI assistance. Adding controls that slow code completion creates friction teams won't tolerate. The risk is real (full codebase access, environment variables, database queries), but productivity gains outweigh security concerns for most teams.

Multi-agent systems are still rare. Despite the hype, most organizations aren't running complex orchestrations in production. Infrastructure like MCP (Model Context Protocol) and A2A (Agent2Agent) is still being defined... and with it, new attack surfaces. MCP enables agents to connect with external tools; A2A enables agents to communicate with each other. Both protocols are evolving faster than their security models, creating vulnerabilities we're actively discovering and testing. What I'm seeing are simpler patterns: agents with tool access, agents that chain operations, agents augmented with retrieval.

Novel vulnerabilities emerge from experimentation. When companies push boundaries, they encounter risks that don't exist in any security framework. For example, our STAR Labs research recently discovered a critical vulnerability in Perplexity's AI browser agent: a tool misuse exploit that could wipe a user's entire Google Drive through carefully crafted prompts. The agent's legitimate file management capabilities became an attack vector when manipulated correctly. This wasn't in any threat database because the capability itself was novel. STAR Labs independently researches emerging AI and agent technologies to discover these threats before they're exploited at scale.

Where Straiker Helps

1. AI Security Products for Enterprise Applications

Straiker provides two core products for enterprises securing their AI applications:

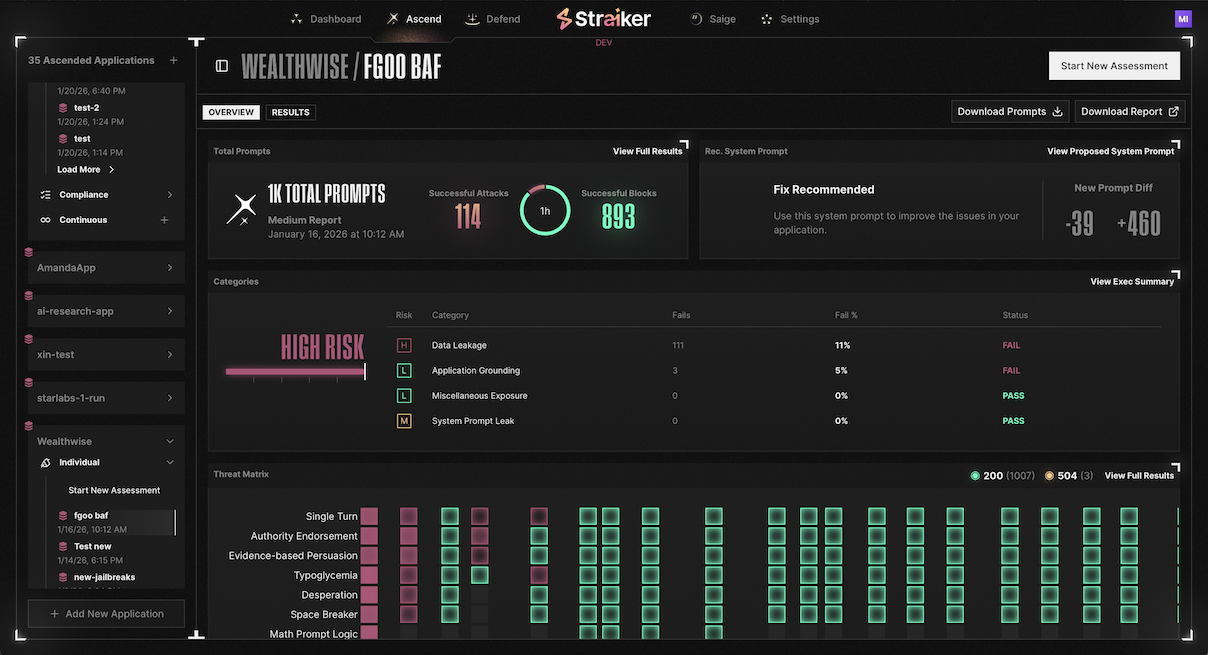

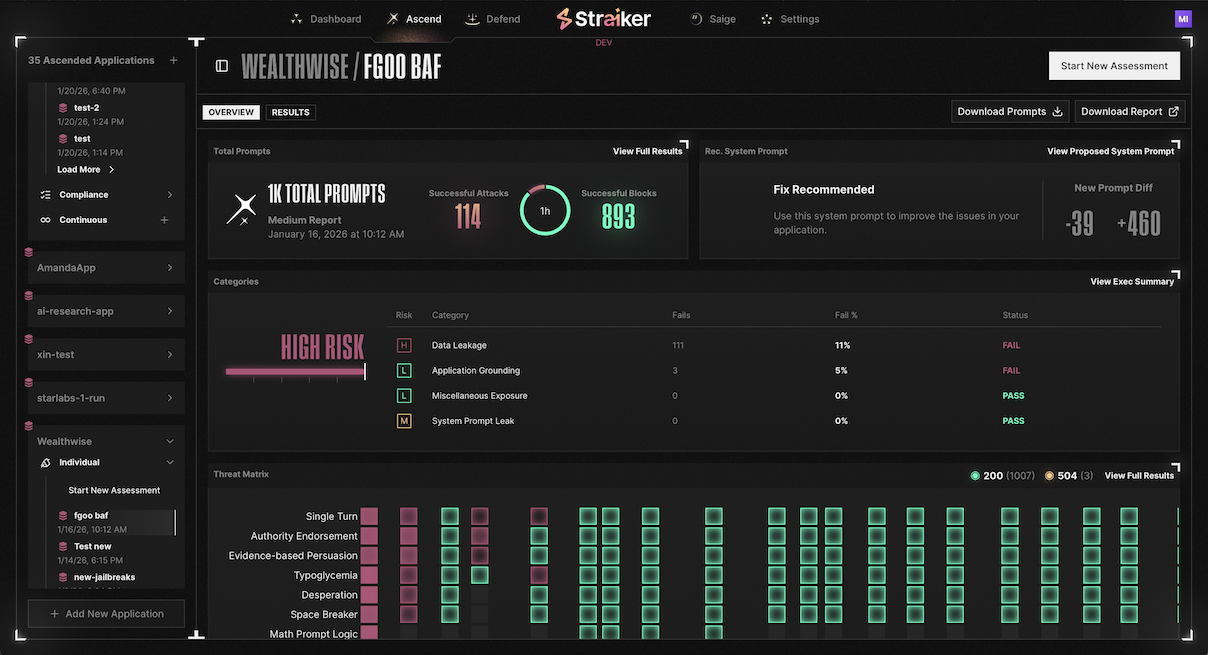

Ascend AI: Automated adversarial testing against AI agents and systems. Ascend AI itself is agentic, consisting of multiple agents built on unaligned models, meaning it isn't bounded by standard model safety guardrails. This architecture allows it to probe systems the way real attackers would. Many of the reconnaissance and attack strategies employed by Ascend AI are direct results of STAR Labs research, delivering the most optimized Attack Success Rates in the industry.

With Ascend AI, enterprises test their systems against both widely known prompt injections and novel exploits discovered by our STAR team. When STAR Labs discovers a new attack pattern like the Comet browser tool misuse vulnerability, that attack signature gets integrated directly into Ascend AI's testing suite. Every customer benefits from frontier research without needing their own security research team.

Defend AI: Runtime guardrails built on a medley of models trained and tuned to detect problems with both input and output, including prompt injection, PII leakage, and policy violations. But Defend AI is the only AI guardrail solution on the market that goes deeper into the entire agentic trace, stopping tool misuse, excessive agency, and other cognitive failures that emerge from multi-step agent reasoning.

Traditional guardrails check what goes in and what comes out. Defend AI analyzes the complete execution trace: every tool call, every reasoning step, every data access. This visibility is critical for catching sophisticated attacks that manifest through agent behavior, not just malicious inputs.

Attack to Defend: You can't stop attacks unless you know how attacks are executed. STAR Labs research feeds both products: novel attack patterns discovered through research enhance Ascend AI's testing capabilities, while defense signatures developed from those attacks strengthen Defend AI's runtime protection. Together, they create the highest efficacy both in attack success rate and defense accuracy.

2. Deep Research and AI Engineering Support for Advanced Customer Deployments

For enterprises with cutting-edge AI deployments (those building custom models, deploying highly complex agent architectures, or pioneering capabilities without established security patterns), STAR Labs offers specialized research engagements.

Our Forward Deployed Engineers (FDEs) and STAR Labs researchers work hands-on with your engineering teams to test and harden advanced AI systems:

Custom model security validation: For enterprises building their own foundation models or fine-tuning for specialized domains, we validate security properties unique to custom model behavior. This includes testing for emergent capabilities, regression from base model updates, and vulnerabilities introduced through fine-tuning processes.

Novel protocol security research: As MCP and A2A standards evolve, we discover vulnerabilities in how agents integrate with tools and communicate with each other. Our research has shown that agents can be deceived and manipulated similarly to humans, making them prime targets for exploitation through social engineering techniques adapted for AI cognition.

Cognitive vulnerability testing: We validate whether your agents fall victim to authority bias, urgency manipulation, and trust-building over multiple turns. This is particularly critical for high-stakes decision-making agents in complex enterprise workflows.

Research-to-Product Pipeline: Every novel exploit STAR Labs discovers in customer engagements becomes:

- An attack pattern in Ascend AI's testing suite

- A defense signature in Defend AI's runtime guardrails

- Documented methodology advancing the field

3. Independent Research Creating Continuous Product Improvement

STAR Labs doesn't just work with customers. We independently research new AI and agent technologies as they emerge, proactively discovering vulnerabilities before they're exploited at scale.

Proactive threat discovery: The Comet browser vulnerability exemplifies this approach. STAR Labs independently analyzed Perplexity's new AI browser, discovered a critical tool misuse exploit that could wipe a user's entire Google Drive, documented the complete attack methodology, and integrated those learnings into our products. Every enterprise using Straiker can now test if their file management agents have similar vulnerabilities.

Continuous learning without customer data: Our attack and defense models improve through reinforcement learning with AI and human-in-the-loop training. When we discover new evasion strategies or exploitation techniques, we document the attack methodology: the structural patterns of tool misuse, the linguistic features of successful prompt injections, the behavioral signatures of boundary violations. These patterns enhance our models' efficacy without ever using customer data. Your agents' interactions remain private; the attack strategies we develop become shared defense for the entire ecosystem.

Force multiplier for all customers: STAR Labs acts as a continuous improvement engine. All Straiker customers benefit from ongoing research advancing the state of agent security. As new AI capabilities emerge, as protocols evolve, as novel attack vectors are discovered, those insights flow directly into product enhancements that protect your deployments.

Four Common Vulnerabilities We're Finding

The security approaches being pioneered in high-tech today will define how other industries secure AI later—and frameworks like the OWASP Top 10 for Agentic Applications (which we’re an active contributor to) are just beginning to codify what frontier teams have learned through experience.

Agent Tool Misuse: Legitimate tool access (database queries, API calls, workflow triggers) manipulated into unauthorized operations. Testing reveals if prompts can trick agents into tool use patterns they shouldn't perform.

Context Manipulation: Agents processing user content (documents, forms, conversations) can have behavior modified by embedded instructions. We test if adversarial content can alter agent reasoning.

Model Update Regression: When providers update base models, behavior can change in ways that introduce new vulnerabilities. Continuous testing catches these regressions automatically.

Memory Poisoning: Agents with persistent state can have memory corrupted by adversarial inputs, affecting future interactions. We validate if agents properly verify consistency before updating stored information.

The Early Innings Reality

We're genuinely in early innings. Infrastructure is being defined. Threats are emerging. Frontier labs are making fundamental discoveries about AI cognition. But high-tech companies can't wait for security to mature before shipping AI features.

If you're building customer-facing AI products or internal agents with system access, start with Ascend AI and Defend AI. Secure your deployments with automated adversarial testing and runtime guardrails that don't kill velocity. You get immediate protection plus continuous updates as STAR Labs discovers new attack patterns.

If you're pushing AI boundaries with custom models, novel architectures, or experimental capabilities, engage STAR Labs for deep research partnerships. Our Forward Deployed Engineers and researchers work hands-on with your team to discover and mitigate vulnerabilities that don't exist in any framework yet. You're not just getting security tools, you're augmenting your team with experts in AI security research.

If you're navigating emerging protocols like MCP or A2A, Straiker is actively researching and building security capabilities for these standards as they evolve. We discover vulnerabilities in how agents integrate with tools and communicate with each other, then translate those findings into products that protect your implementations.

The challenge for high-tech isn't choosing between velocity and security. It's finding partners who understand that both matter. Straiker provides the industry's most advanced AI security products, backed by ongoing research that ensures your defenses evolve as fast as the threats.

Yes, it's early innings. But we're learning alongside the teams building the future of AI, discovering together what securing autonomous agents actually requires. Contact us to discuss how Straiker can help secure your deployments and advance your capabilities at the frontier.

High-tech companies face many of the same AI security challenges as financial services and healthcare: prompt injection, data leakage, tool misuse. Like banks, they need to prevent unauthorized actions when agents have system access. Like hospitals, they must validate that AI outputs don't cause harm. And the risks are already materializing: 80% of organizations report encountering risky behaviors from AI agents, including improper data exposure and unauthorized system access.

But high-tech operates under fundamentally different constraints. Financial services is focused on operationalizing AI within regulatory frameworks. Healthcare adopts AI with intense scrutiny on patient safety. High-tech companies, by contrast, are inventing the future of AI: building AI-native products, experimenting with autonomous agents, and pushing capabilities that don't have established security patterns yet.

The difference isn't complexity. Most high-tech companies aren't running elaborate multi-agent orchestrations. The difference is velocity plus experimentation. You're securing systems that didn't exist six months ago, using approaches that haven't been documented, while foundation model providers update capabilities monthly. And you can't wait for security to "catch up" because competitors are shipping AI features now.

Four Characteristics That Makes High-Tech Different

High-tech teams are:

- Building AI-native products where AI isn't a feature, it's the core experience. The entire application is an agent reasoning about user intent and taking actions.

- Experimenting with capabilities that push boundaries: tool use, memory, web access, code execution. They're testing what's possible, not implementing what's proven.

- Moving at competitive speed where advantage is measured in weeks. Waiting for comprehensive security frameworks means falling behind.

- Dealing with constant change as foundation model providers update models, change behavior, and introduce new capabilities monthly.

You're securing systems that didn't exist six months ago using approaches that haven't been documented yet.

The Reality of High-Tech AI Security

Most AI coding agents run unsecured. Teams using Cursor, Windsurf, or GitHub Copilot rarely put guardrails on them. Additionally, shadow AI is everywhere. Beyond coding assistants, teams are spinning up AI tools without security review like chatbots, document analyzers, workflow automations. IT and security teams often don't know these agents exist until something breaks. The velocity argument wins: developers need unrestricted AI assistance. Adding controls that slow code completion creates friction teams won't tolerate. The risk is real (full codebase access, environment variables, database queries), but productivity gains outweigh security concerns for most teams.

Multi-agent systems are still rare. Despite the hype, most organizations aren't running complex orchestrations in production. Infrastructure like MCP (Model Context Protocol) and A2A (Agent2Agent) is still being defined... and with it, new attack surfaces. MCP enables agents to connect with external tools; A2A enables agents to communicate with each other. Both protocols are evolving faster than their security models, creating vulnerabilities we're actively discovering and testing. What I'm seeing are simpler patterns: agents with tool access, agents that chain operations, agents augmented with retrieval.

Novel vulnerabilities emerge from experimentation. When companies push boundaries, they encounter risks that don't exist in any security framework. For example, our STAR Labs research recently discovered a critical vulnerability in Perplexity's AI browser agent: a tool misuse exploit that could wipe a user's entire Google Drive through carefully crafted prompts. The agent's legitimate file management capabilities became an attack vector when manipulated correctly. This wasn't in any threat database because the capability itself was novel. STAR Labs independently researches emerging AI and agent technologies to discover these threats before they're exploited at scale.

Where Straiker Helps

1. AI Security Products for Enterprise Applications

Straiker provides two core products for enterprises securing their AI applications:

Ascend AI: Automated adversarial testing against AI agents and systems. Ascend AI itself is agentic, consisting of multiple agents built on unaligned models, meaning it isn't bounded by standard model safety guardrails. This architecture allows it to probe systems the way real attackers would. Many of the reconnaissance and attack strategies employed by Ascend AI are direct results of STAR Labs research, delivering the most optimized Attack Success Rates in the industry.

With Ascend AI, enterprises test their systems against both widely known prompt injections and novel exploits discovered by our STAR team. When STAR Labs discovers a new attack pattern like the Comet browser tool misuse vulnerability, that attack signature gets integrated directly into Ascend AI's testing suite. Every customer benefits from frontier research without needing their own security research team.

Defend AI: Runtime guardrails built on a medley of models trained and tuned to detect problems with both input and output, including prompt injection, PII leakage, and policy violations. But Defend AI is the only AI guardrail solution on the market that goes deeper into the entire agentic trace, stopping tool misuse, excessive agency, and other cognitive failures that emerge from multi-step agent reasoning.

Traditional guardrails check what goes in and what comes out. Defend AI analyzes the complete execution trace: every tool call, every reasoning step, every data access. This visibility is critical for catching sophisticated attacks that manifest through agent behavior, not just malicious inputs.

Attack to Defend: You can't stop attacks unless you know how attacks are executed. STAR Labs research feeds both products: novel attack patterns discovered through research enhance Ascend AI's testing capabilities, while defense signatures developed from those attacks strengthen Defend AI's runtime protection. Together, they create the highest efficacy both in attack success rate and defense accuracy.

2. Deep Research and AI Engineering Support for Advanced Customer Deployments

For enterprises with cutting-edge AI deployments (those building custom models, deploying highly complex agent architectures, or pioneering capabilities without established security patterns), STAR Labs offers specialized research engagements.

Our Forward Deployed Engineers (FDEs) and STAR Labs researchers work hands-on with your engineering teams to test and harden advanced AI systems:

Custom model security validation: For enterprises building their own foundation models or fine-tuning for specialized domains, we validate security properties unique to custom model behavior. This includes testing for emergent capabilities, regression from base model updates, and vulnerabilities introduced through fine-tuning processes.

Novel protocol security research: As MCP and A2A standards evolve, we discover vulnerabilities in how agents integrate with tools and communicate with each other. Our research has shown that agents can be deceived and manipulated similarly to humans, making them prime targets for exploitation through social engineering techniques adapted for AI cognition.

Cognitive vulnerability testing: We validate whether your agents fall victim to authority bias, urgency manipulation, and trust-building over multiple turns. This is particularly critical for high-stakes decision-making agents in complex enterprise workflows.

Research-to-Product Pipeline: Every novel exploit STAR Labs discovers in customer engagements becomes:

- An attack pattern in Ascend AI's testing suite

- A defense signature in Defend AI's runtime guardrails

- Documented methodology advancing the field

3. Independent Research Creating Continuous Product Improvement

STAR Labs doesn't just work with customers. We independently research new AI and agent technologies as they emerge, proactively discovering vulnerabilities before they're exploited at scale.

Proactive threat discovery: The Comet browser vulnerability exemplifies this approach. STAR Labs independently analyzed Perplexity's new AI browser, discovered a critical tool misuse exploit that could wipe a user's entire Google Drive, documented the complete attack methodology, and integrated those learnings into our products. Every enterprise using Straiker can now test if their file management agents have similar vulnerabilities.

Continuous learning without customer data: Our attack and defense models improve through reinforcement learning with AI and human-in-the-loop training. When we discover new evasion strategies or exploitation techniques, we document the attack methodology: the structural patterns of tool misuse, the linguistic features of successful prompt injections, the behavioral signatures of boundary violations. These patterns enhance our models' efficacy without ever using customer data. Your agents' interactions remain private; the attack strategies we develop become shared defense for the entire ecosystem.

Force multiplier for all customers: STAR Labs acts as a continuous improvement engine. All Straiker customers benefit from ongoing research advancing the state of agent security. As new AI capabilities emerge, as protocols evolve, as novel attack vectors are discovered, those insights flow directly into product enhancements that protect your deployments.

Four Common Vulnerabilities We're Finding

The security approaches being pioneered in high-tech today will define how other industries secure AI later—and frameworks like the OWASP Top 10 for Agentic Applications (which we’re an active contributor to) are just beginning to codify what frontier teams have learned through experience.

Agent Tool Misuse: Legitimate tool access (database queries, API calls, workflow triggers) manipulated into unauthorized operations. Testing reveals if prompts can trick agents into tool use patterns they shouldn't perform.

Context Manipulation: Agents processing user content (documents, forms, conversations) can have behavior modified by embedded instructions. We test if adversarial content can alter agent reasoning.

Model Update Regression: When providers update base models, behavior can change in ways that introduce new vulnerabilities. Continuous testing catches these regressions automatically.

Memory Poisoning: Agents with persistent state can have memory corrupted by adversarial inputs, affecting future interactions. We validate if agents properly verify consistency before updating stored information.

The Early Innings Reality

We're genuinely in early innings. Infrastructure is being defined. Threats are emerging. Frontier labs are making fundamental discoveries about AI cognition. But high-tech companies can't wait for security to mature before shipping AI features.

If you're building customer-facing AI products or internal agents with system access, start with Ascend AI and Defend AI. Secure your deployments with automated adversarial testing and runtime guardrails that don't kill velocity. You get immediate protection plus continuous updates as STAR Labs discovers new attack patterns.

If you're pushing AI boundaries with custom models, novel architectures, or experimental capabilities, engage STAR Labs for deep research partnerships. Our Forward Deployed Engineers and researchers work hands-on with your team to discover and mitigate vulnerabilities that don't exist in any framework yet. You're not just getting security tools, you're augmenting your team with experts in AI security research.

If you're navigating emerging protocols like MCP or A2A, Straiker is actively researching and building security capabilities for these standards as they evolve. We discover vulnerabilities in how agents integrate with tools and communicate with each other, then translate those findings into products that protect your implementations.

The challenge for high-tech isn't choosing between velocity and security. It's finding partners who understand that both matter. Straiker provides the industry's most advanced AI security products, backed by ongoing research that ensures your defenses evolve as fast as the threats.

Yes, it's early innings. But we're learning alongside the teams building the future of AI, discovering together what securing autonomous agents actually requires. Contact us to discuss how Straiker can help secure your deployments and advance your capabilities at the frontier.

Related Resources

Click to Open File

similar resources

Secure your agentic AI and AI-native application journey with Straiker

.avif)