10 Hard-Won Lessons from Building an AI Security Company in 2025

Straiker CEO's honest reflections on what it takes to build an AI security company when chatbots become agents, acquisitions accelerate, and customers still don't know what they need.


2025 was the year AI stopped being a tool and started taking action. It stopped being something we experimented with and started doing real work in production. Autonomous systems began driving cars, writing code, and running workflows with far less human oversight than before.

That shift created massive opportunity, and it also created risks most enterprises are only starting to understand. As the co-founder and CEO of an AI security company, I watched this change happen up close.

These are ten reflections from a year spent building at the intersection of AI and security. They reflect what I learned about the market, the work, and the people who matter when things get hard.

#1 – AI progress moved into the mainstream in 2025.

2025 was the first year people routinely encountered autonomous AI systems — on roads, in factories, and in software workflows — signaling a shift from tools that assist to systems that act independently.

- ChatGPT reached population-scale adoption, with roughly 800 million weekly active users worldwide.

- Coding agents demonstrated persistent autonomy, and it still feels like we’re in the early days.

- In physical AI, autonomous driving became visible in everyday life. Waymo, Cruise and Tesla removed safety drivers in multiple U.S. cities. As of December 2025, Waymo has provided over 14 million rides this year alone and now averages 450,000+ rides per week. Autonomous robots moved from controlled demos into live industrial environments. Tesla showed Optimus operating in multi-hour autonomous pick-and-place and parts-handling tasks. Figure began commercial deployment agreements following multi-hour autonomous warehouse runs.

#2 – 2025 became the year enterprises shifted from chatbots to autonomous agents.

Enterprise AI adoption moved beyond chatbot interfaces toward agents that could plan tasks, call tools, and execute workflows independently. The shift was visible in real deployments and in forward-looking spending and architecture plans.

- One analyst forecast projects that 40% of enterprise applications will embed autonomous agents by 2026, up from less than 5% in early 2025. This forecast alone shows that enterprises now view execution-capable AI as strategic, not experimental.

- McKinsey’s State of AI 2025 found 23% of organizations scaling agentic systems, with another 39% actively experimenting in workflow use cases.

From my customer conversations, agent deployment in production hasn’t hit mainstream adoption yet, but just about every enterprise I’ve talked to is heading in that direction.

#3 – The enterprise shift toward AI Agents was made possible by step-function improvements in models.

The move from chatbots to autonomous agents wasn’t only a design choice. It became possible because foundation models in 2025 crossed capability thresholds that made planning, tool use, and long-context reasoning reliable enough for enterprise workflows.

- GPT-5 / GPT-5.2 (OpenAI) improved reasoning consistency and long-context handling, allowing agents to remember steps across a workflow instead of resetting every few prompts.

- Gemini 3.0 (Google) built on lessons from the more efficient Nano Banana family of models and delivered stronger multimodal grounding and faster tool integration, enabling practical automation involving screenshots, structured docs, and UI flows.

- Claude 4.x (Anthropic) improved planning reliability and tool-calling success rates, making agents less brittle and more predictable over multiple steps.

- Qwen 2 (Alibaba) advanced open-weight performance with strong tool-use benchmarks, enabling enterprises to experiment with agents on infrastructure they could control.

- DeepSeek-V3 (DeepSeek AI) pushed efficient training + deployment for high-capacity models, accelerating large-scale open-weight adoption in Asia and lowering compute barriers for enterprise agent development.

At Straiker, fine-tuning top open-source models has served us well for Attack (Red Teaming) and Defense (Guardrails), but we’re keeping our options open even though it’s painful to swap out models and retrain. It’s too early to pick winners at this stage of the game.

#4 – The Agentic AI Application race began to settle in 2025.

As models improved and agents became viable, the Agentic AI Application ecosystem started to settle and winners started to emerge. I use a lot of Agentic AI apps every day, and my personal take is that AI-native apps (Coding, Productivity, Sales) are way better than AI bolted on top of existing SaaS. Incumbents are “supposed” to have the data advantage, but all of them have botched the experience and execution.

- Coding: GitHub Copilot surpassed 20 million users globally and is now used by over 90% of Fortune 100 companies, showing deep integration in enterprise engineering workflows. Enterprise adoption grew ~75% quarter-over-quarter in 2025. Other coding tools like Claude Code and Cursor are increasingly adopted across engineering teams as part of multi-tool workflows, with surveys showing ~90% of engineering teams using AI coding tools regularly.

- Customer Service: Sierra AI emerged in 2025 as a leading agentic support automation platform. In our experience, the market still appears to be fragmented: we have customers using Sierra, Decagon, and homegrown support apps.

- Productivity: Microsoft Copilot (across 365 + Teams) is used by nearly 90% of Fortune 500 companies, with hundreds of thousands of active organizations using embedded AI to automate document work, summarization, and task creation. Glean has gained traction as an AI-driven knowledge assistant, helping enterprises surface relevant information across disconnected systems, with multiple reports noting adoption in Fortune 500 environments and measurable employee time savings. About 8 in 10 customers we talk to are on Microsoft Copilot, with Glean and Google as the next two. This is a statement about adoption, not about the effectiveness of the tool.

- Sales: Although Agentforce has created a lot of buzz in the market with its Sales AI, I believe there are no clear winners yet. We’ve tried several Sales AI add-ons (call recorders, AI SDRs, top-of-funnel tools) on top of existing CRM solutions, and none of them have been impressive. Call recorders add value, but it’s clear that most Sales AI tools offer only incremental improvements. CRM itself has not been reimagined for an AI-first world, leaving teams dependent on incumbent platforms that rely on bolted-on AI rather than native intelligence.

- Legal: Harvey AI became a go-to platform for legal automation in 2025, widely adopted in law firms and legal departments for contract review, legal research, and compliance tasks — with several organizations reporting time savings of 50–70% on document review cycles.

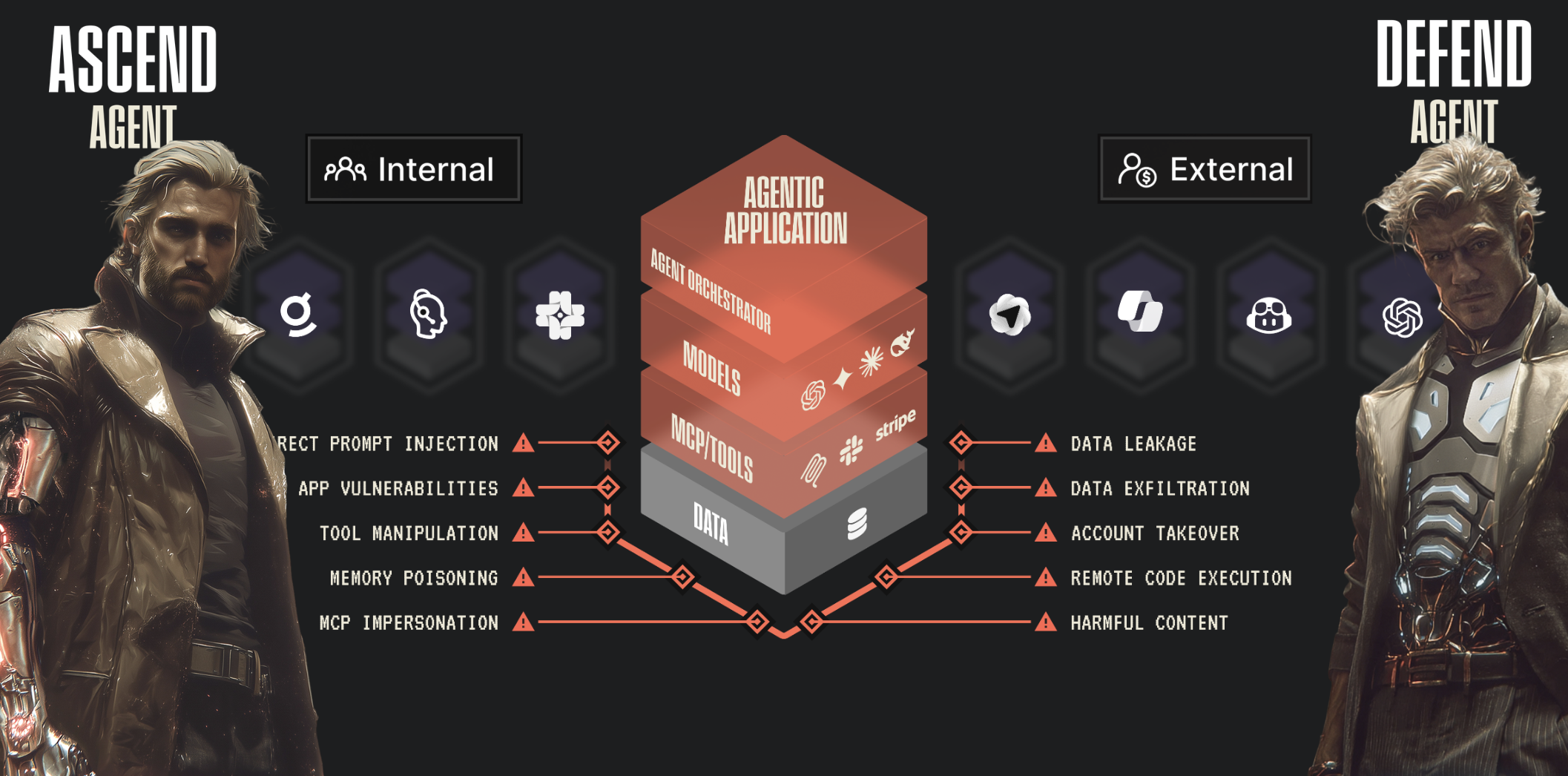

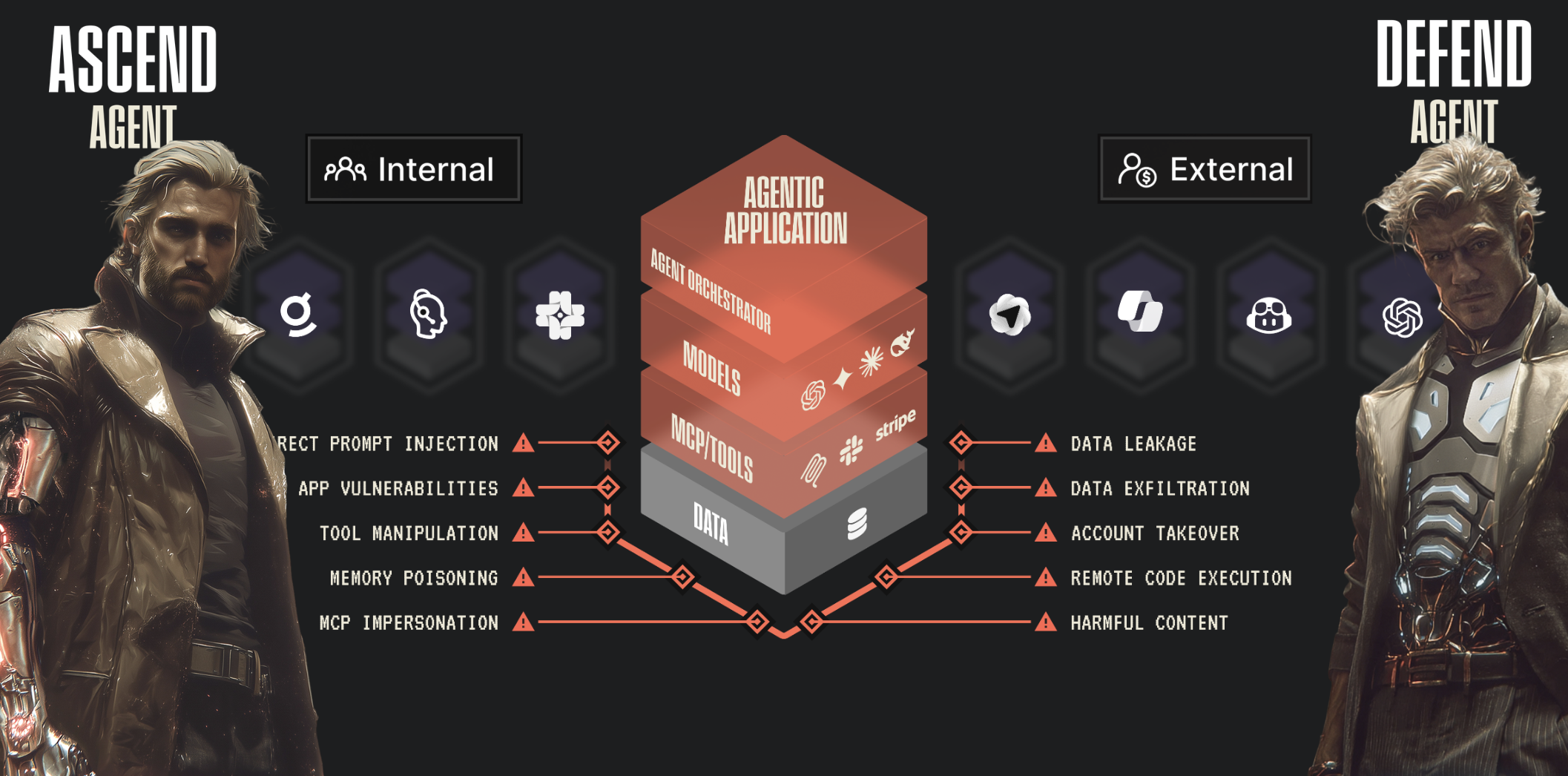

#5 – AI security concerns shifted from prompt injection to securing autonomous agents, driven by real incidents in 2025.

As Agentic AI Apps start to become mainstream, security is top of mind for all enterprises. We have yet to talk to a large bank or healthcare company that hasn’t already bought an AI security solution or isn’t in the eval process. Early AI security efforts mostly focused on preventing harmful text output or prompt injection attacks in chat interfaces. But 2025 exposed a different, more serious threat: autonomous agents interacting with real systems, tools, workflows, and browsers, where the damage potential is exponential, not conversational.

Public incidents and research accelerated this shift:

- Anthropic disclosed a nation-state attempt to operationalize autonomous AI for high-tempo cyber operations, marking one of the first public confirmations of adversaries exploring autonomous AI for offense.

- Among enterprise incidents, Lenovo’s chatbot was breached and McDonald’s hiring chatbot (McHire) had a major data breach.

- Straiker researchers uncovered “Villager,” an AI-augmented penetration testing toolkit distributed via PyPI that surpassed 10,000 downloads in months, demonstrating how agentic automation lowers the skill and effort required to run coordinated attack chains.

- Straiker documented vulnerabilities in Perplexity’s Comet browser agent that could enable OAuth escalation across connected services, referenced in major media coverage and reinforcing how browser-level agents expand the attack surface in ways traditional web security controls don’t anticipate.

These incidents highlighted risks unique to agents: indirect prompt injection, data poisoning, unsafe multi-step tool/API execution, privileged browser automation, memory/state persistence leading to escalation, and workflow-level access pathways.

Enterprise sentiment reflected this shift. A Gartner survey in September 2025 found 71% of security and risk leaders believe agent security will require new controls beyond LLM prompt-level protections, and over 40% planned budget increases for AI/agent security in 2026 as a direct response.

#6 – AI Security saw the fastest M&A cycle in Cyber

Once the magnitude of this opportunity became obvious, platform players didn’t wait for the category to mature. They moved immediately. We are already in a year of consolidation. Palo Alto Networks acquired Protect AI, Zscaler acquired SPLX, CrowdStrike acquired Pangea, SentinelOne acquired Prompt Security, Cato Networks acquired Aim Security, and Check Point acquired Lakera. These aren’t feature tuck-ins; they are strategic levers for growth for the incumbents.

I’ve seen this movie play out in Mobile security, CASB (SaaS security), and CNAPP (Cloud security). If history is any indication, pure-play AI-native security companies like Straiker will prevail. This category is too important to be owned by a stitched-together solution that takes forever to integrate.

#7 – Customers are clear on the “Why” but not the “What” and the “How”

After talking to 500+ customers across sizes, industries, and AI maturity levels, one thing is clear: everyone wants to do “AI security” — but almost no one starts with the same definition.

Is AI security:

- AI Visibility and governance?

- AI Red teaming and risk assessment?

- AI Runtime guardrails?

- All of the above — as a platform?

What’s the biggest threat vector? Injection attacks (direct and indirect), data leakage, data exfiltration, toxicity, web exploits using natural language, or all of the above?

Is it for simple chatbots or agentic AI? And if agents, which architecture? Homegrown, using an orchestrator like CrewAI, or built on hyperscaler platforms like Amazon Bedrock AgentCore or Azure AI Foundry? Is tool access exclusively over MCP? Is it RAG-heavy?

The good news: there’s far more clarity today than even six months ago. Across those 500+ conversations, the general consensus is to have a program in place for AI Red Teaming and Guardrails for Agentic AI. Enterprises are prioritizing external-facing Agentic AI apps first and internal apps second.

#8 – “The team you build is the company you build”

A high-functioning team can make or break a company. Having built teams across e-commerce, mobile, SaaS, and cloud security, it would have been easier to assemble a familiar crew from the past. But it was clear early on that we’re playing baseball, not cricket — the rules, pace, and skills required are fundamentally different.

Building the world’s best AI security company demands a team at the intersection of AI and security. Over-rotate on AI and you risk building something that’s more safety than security. Over-rotate on traditional security and you end up repackaging AppSec, XDR, or web security concepts that simply won’t uncover cutting-edge AI exploits.

True AI security requires deep security intuition paired with strong AI engineering — people who can turn real-world data into models, continuously fine-tune systems, and operate across both attack and defense in production environments.

Beyond skill sets, the best teams share three defining traits — the three I’s: Intellect, Integrity and Intensity.

#9 – Clarity over completeness in building a winning product

I’ve been part of multiple 0-to-scale products across five startups and four large companies. While the formula for winning products keeps evolving, a few fundamentals — like simplicity — never change. One hard-earned lesson is that only two or three killer features actually matter — the ones that deliver the most value to users. The hard part isn’t building them; it’s figuring out which ones they are.

That sounds obvious, yet most products get this wrong. Customers often can’t articulate what they truly need, and internal incentives almost always push teams to build more. The result is bloat instead of clarity.

As we built Ascend AI (red teaming) and Defend AI (guardrails), we forced ourselves to ask uncomfortable questions:

- Do we need a traditional UI, a chatbot UI, or an Agent that automates a bunch of work?

- Should detections rely on a policy engine, AI, or both?

- Do we need alerts and workflows?

- Does every product really need a dashboard?

- Are we building a broad platform — or solving a very specific problem extremely well?

We’re still early in the customer adoption cycle, and there’s a lot we don’t yet know. But one thing became clear quickly. For Ascend AI, what mattered most was attack success rate (ASR): how often we succeeded in breaking the agent. For Defend AI, it was low latency and high accuracy (low FP/FN) in production. Every product decision flowed from improving those metrics.
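For readers who want those metrics made concrete, here is a minimal sketch of how ASR and false positive/false negative rates are commonly computed. It is an illustration only, not Straiker’s implementation: the function names, the `ConfusionCounts` class, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Hypothetical tally of guardrail decisions against labeled traffic."""
    true_positives: int   # attacks correctly blocked
    false_positives: int  # benign requests wrongly blocked
    true_negatives: int   # benign requests correctly allowed
    false_negatives: int  # attacks wrongly allowed


def attack_success_rate(successful_attacks: int, total_attempts: int) -> float:
    """ASR = successful attacks / total attack attempts."""
    if total_attempts <= 0:
        raise ValueError("total_attempts must be positive")
    return successful_attacks / total_attempts


def false_positive_rate(c: ConfusionCounts) -> float:
    """FPR = FP / (FP + TN): share of benign traffic wrongly blocked."""
    benign = c.false_positives + c.true_negatives
    if benign <= 0:
        raise ValueError("no benign samples")
    return c.false_positives / benign


def false_negative_rate(c: ConfusionCounts) -> float:
    """FNR = FN / (FN + TP): share of attacks that slipped through."""
    attacks = c.false_negatives + c.true_positives
    if attacks <= 0:
        raise ValueError("no attack samples")
    return c.false_negatives / attacks


# Made-up numbers, purely for illustration.
asr = attack_success_rate(successful_attacks=18, total_attempts=120)
counts = ConfusionCounts(true_positives=95, false_positives=10,
                         true_negatives=990, false_negatives=5)
fpr = false_positive_rate(counts)
fnr = false_negative_rate(counts)
print(f"ASR={asr:.2%} FPR={fpr:.2%} FNR={fnr:.2%}")
```

A red-teaming product wants ASR to be high against an unprotected agent, while a guardrail wants both FPR and FNR to be low. In practice the two error rates trade off against each other and against latency.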

We don’t yet know if we’ve gotten everything right — but we do know we have a clear thesis. And in early markets, that clarity matters more than completeness.

#10 – Who Shows Up When It Gets Hard

When you’re building—and things get hard, uncertain, or lonely—you learn something very quickly: A lot of people cheer when things are going well. Very few show up when they’re not.

In those moments, a small number of people emerge as true helpers. Not because they have to. Not because it benefits them. But because they choose to lean in when it would be easier not to. They ask the hard questions. They give honest feedback. They make customer introductions. They show up when there’s no spotlight.

We have been incredibly lucky to have several champions of the company; you know who you are. I’m deeply grateful for the people who showed up when it mattered most.

Closing Thoughts

2025 was the year AI moved from tools to action. These reflections capture what it took to build through that shift — in product, security, and team. The implications won’t stop here. 2026 is when these AI agents go live and AI security becomes mainstream. I’ll share my predictions next.
