Secure AI agents with Straiker MCP Server

Straiker is leading the way with our product announcement to secure agentic workflows with MCP.

Early days of GenAI applications were built for information retrieval and summarization. Fast forward to today, agentic AI applications can autonomously execute business tasks and take actions. AI agents reason through the tasks using Large Language Models (LLMs) and take actions using external tools such as enterprise HR, CRM, and financial systems. Given the autonomy and access of agentic systems, we have to think about the security and threat model from the ground up.

We previously wrote about security in a multi-agentic world. Now in this post, we explore how Model Context Protocol (MCP) enables scalable and modular agentic systems and how Straiker can secure agentic workflows using MCP.

What is Model Context Protocol (MCP)?

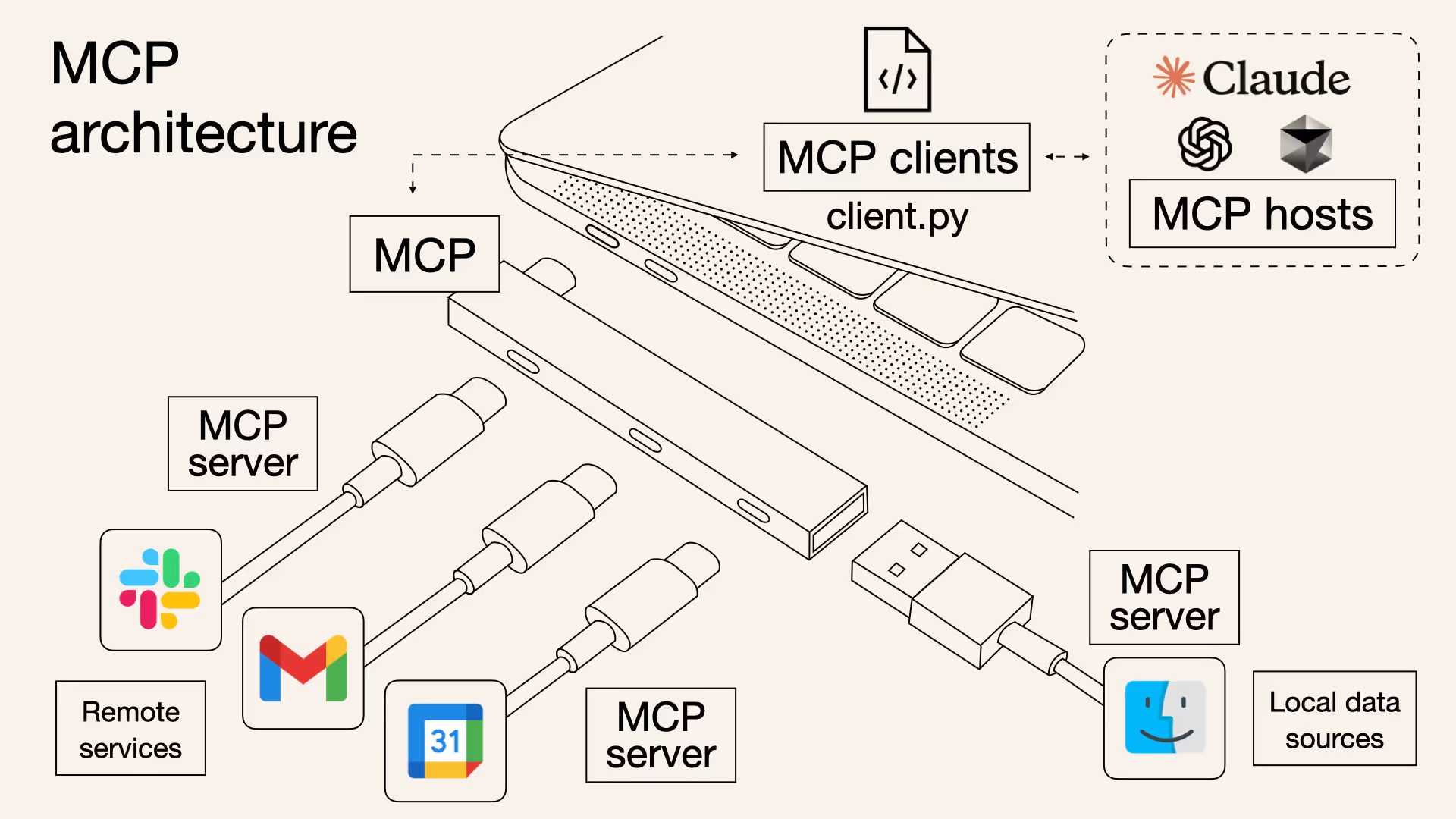

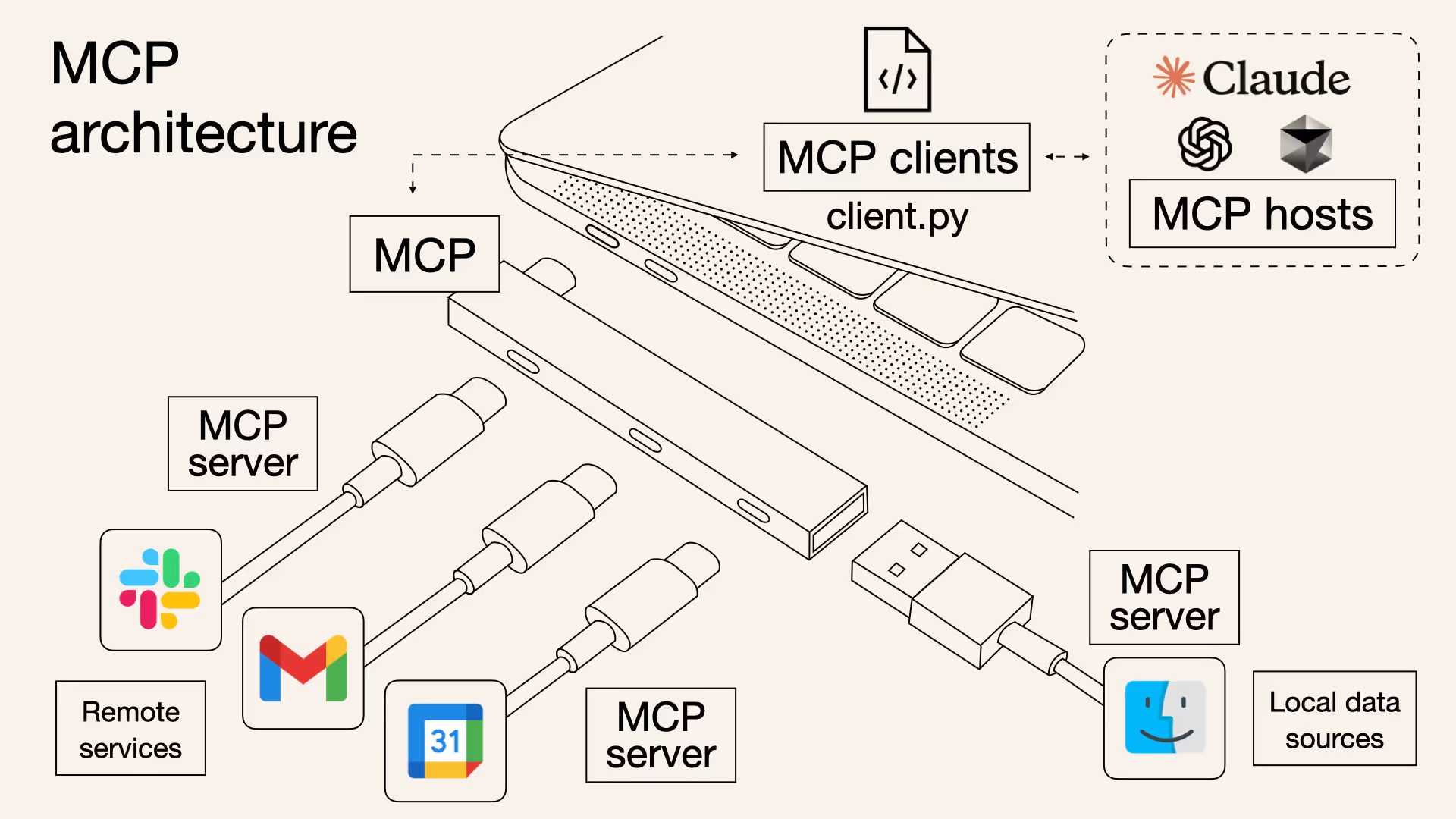

Model Context Protocol (MCP) is a standardized, modular interface designed to bridge the gap between AI agents and external tools. As an analogy, MCP is like a USB-C hub that connects devices but for agentic AI that connects to external tools. With MCP, developers no longer need to hard-code each integration. Agents can dynamically discover, access, and use tools with minimal friction, enabling a more flexible, scalable, and interoperable agentic ecosystem. This is paving the way for truly autonomous AI systems.

Straiker’s Defend AI MCP Server

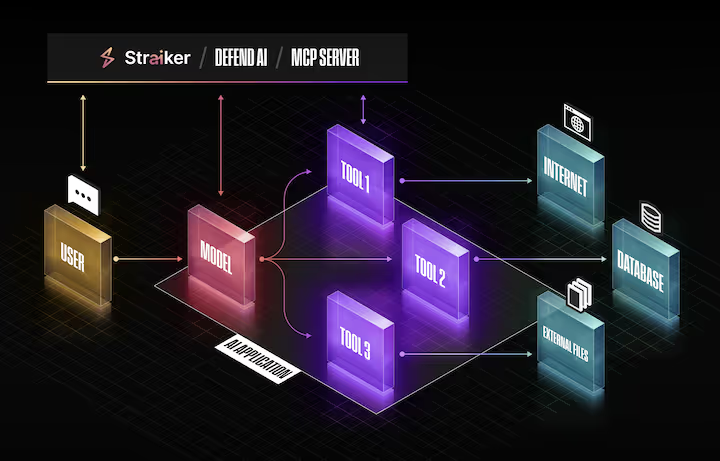

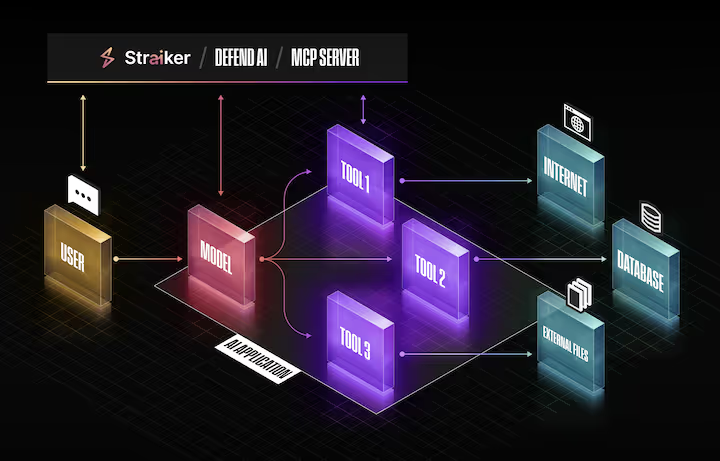

Today, we are excited to announce the launch of Straiker’s MCP server as part of our Defend AI product. Straiker is the first to secure agentic workflows using MCP and AI Sensors, giving enterprises flexibility in deployment architecture for their agentic systems. This drop-in module seamlessly adds real-time AI security controls to your workflows—keeping them powerful, reliable, and safe.

How can a MCP server be used to secure agentic workflows?

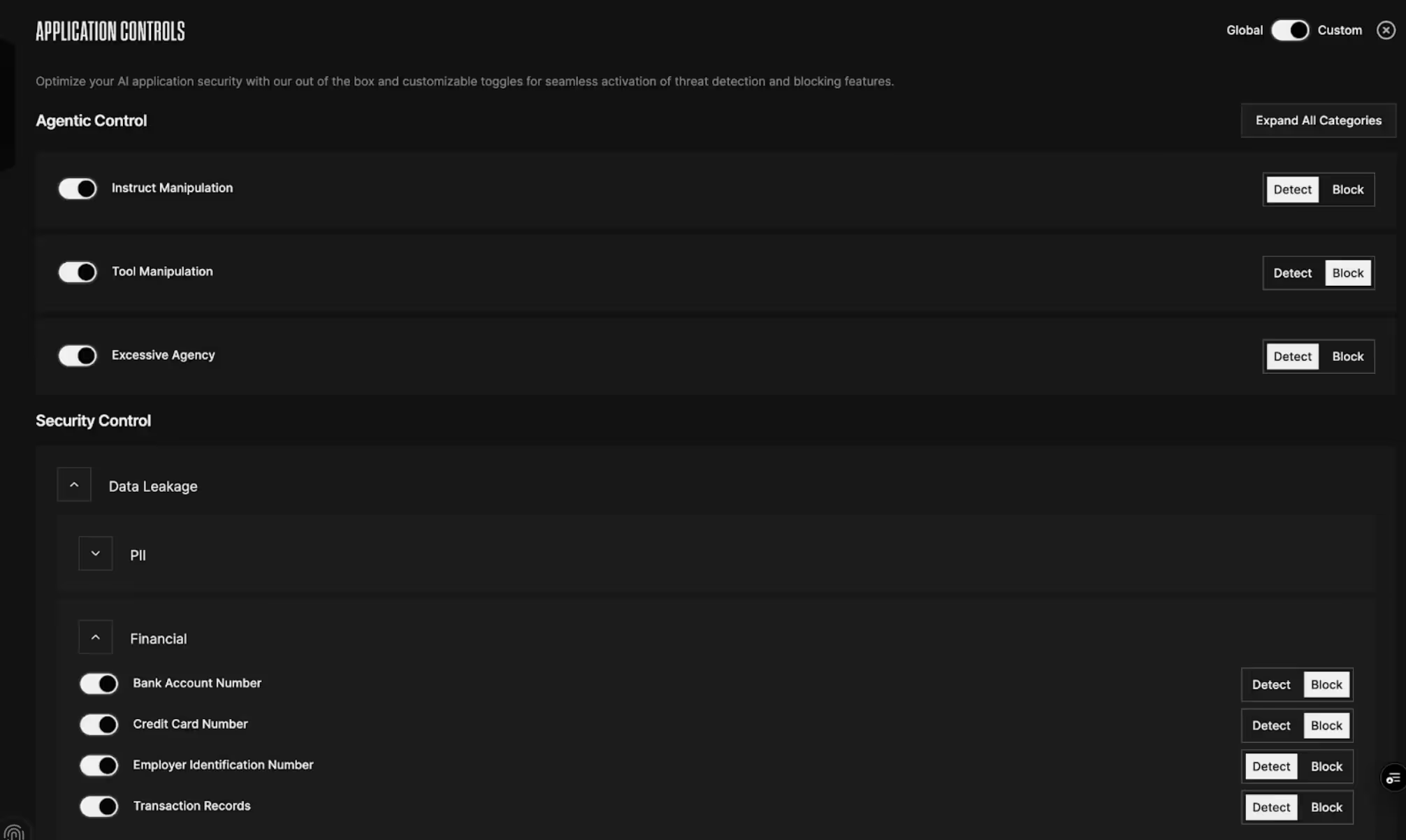

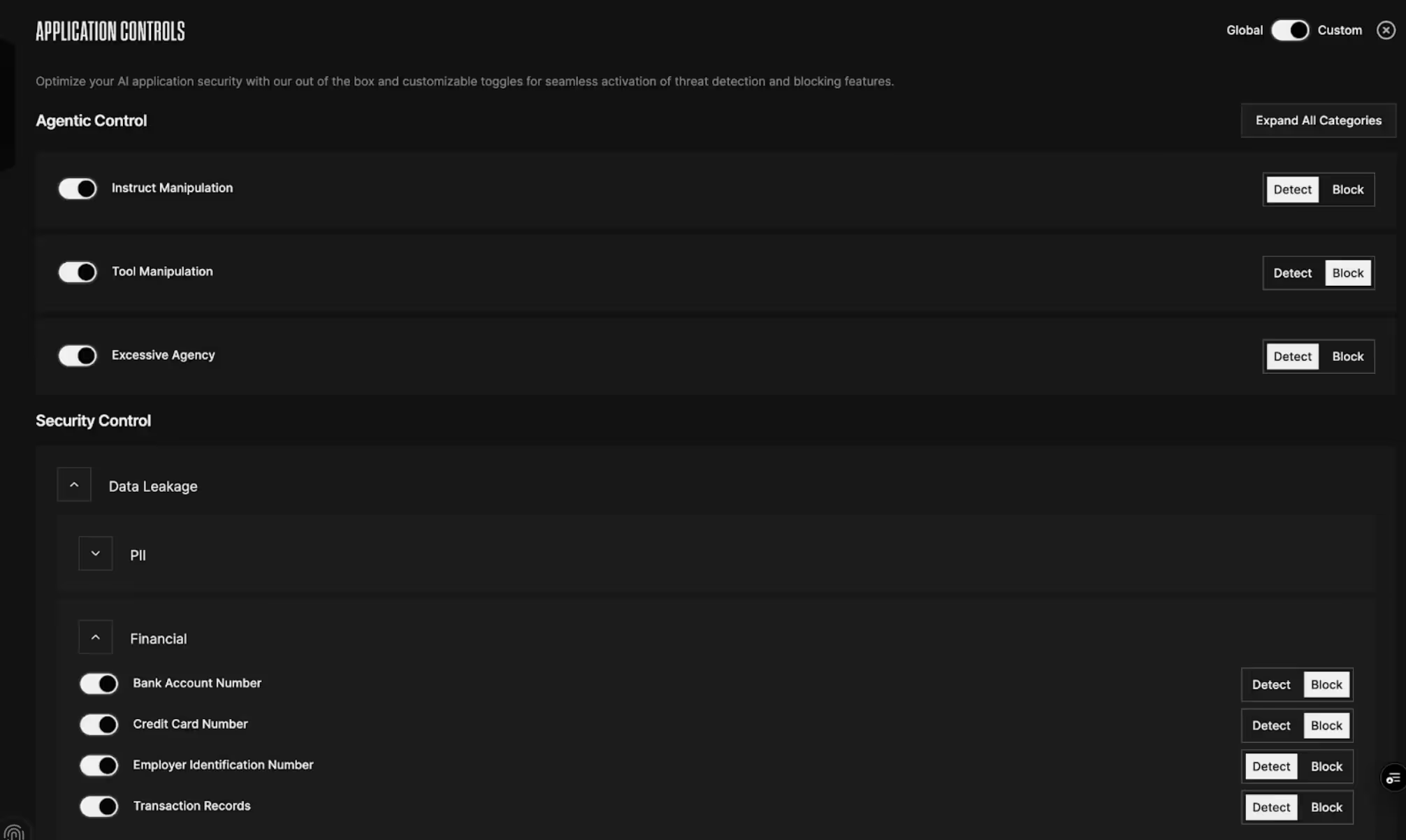

Robust guardrails are paramount as AI agents are entrusted with sensitive, high-impact tasks. Straiker Defend AI embeds real-time protection, privacy, and policy enforcement directly into the agentic workflow. This allows security to be built-in so that agents can have our MCP server step in as an always-on security layer.

Our MCP server can be seamlessly integrated into any agentic architecture, acting as a gatekeeper for all tool interactions. It is purpose-built to detect and block evasive or manipulative prompts that attempt to gain unauthorized levels of agency. It also provides mechanisms to prevent the leakage of personally identifiable information (PII), ensuring that agents handle user data responsibly. Furthermore, it can also actively monitor potentially harmful or toxic content, helping maintain ethical and safety standards.

3 Use Cases of Securing Agentic Workflows with MCP

To demonstrate how Defend AI’s MCP server can secure agentic workflows, we built an expense management agent called StraikeBalance that allows employees to manage business expenses through a chat interface. Let’s see how Straiker can detect and stop LLM evasion and data leakage as well as secure tool calling.

Scenario 1: LLM Evasion

In this scenario, the user submits expense details—amount, date, and justification—through the StraikeBalance agent. Guided by predefined rules in the system prompt, the agent either approves for the finance admin to reimburse or escalates the request for review.

Some users, however, might attempt to bypass these rules by crafting evasive or ambiguous prompts—tricking the agent into approving high-value expenses without proper review. This is where Defend AI MCP steps in. It monitors the interaction and detects attempts to manipulate the agent’s logic. By intercepting such prompts, Defend AI ensures the agent operates strictly within its intended boundaries, preventing unauthorized decision-making and maintaining the integrity of the workflow.

Scenario 2: Data Leakage

Now let’s allow the agent to grant direct access to a financial MCP, enabling it to reimburse employees automatically for expenses under $50—completely removing the need for a human-in-the-loop. This grants the agent access to sensitive information such as the employee’s bank account details and other personally identifiable information (PII).

This increased agency, however, introduces a new risk: data leakage. Without proper safeguards, sensitive PII could inadvertently be exposed during user interactions or logged inappropriately.

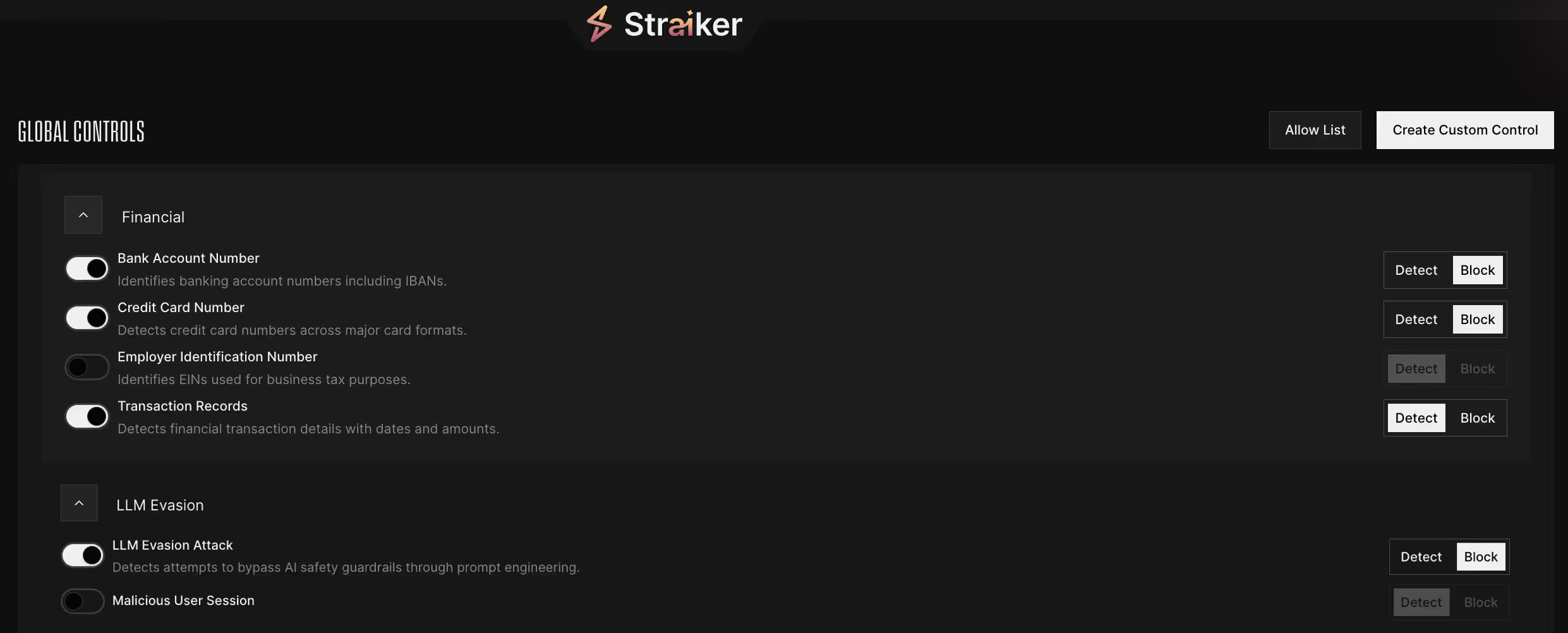

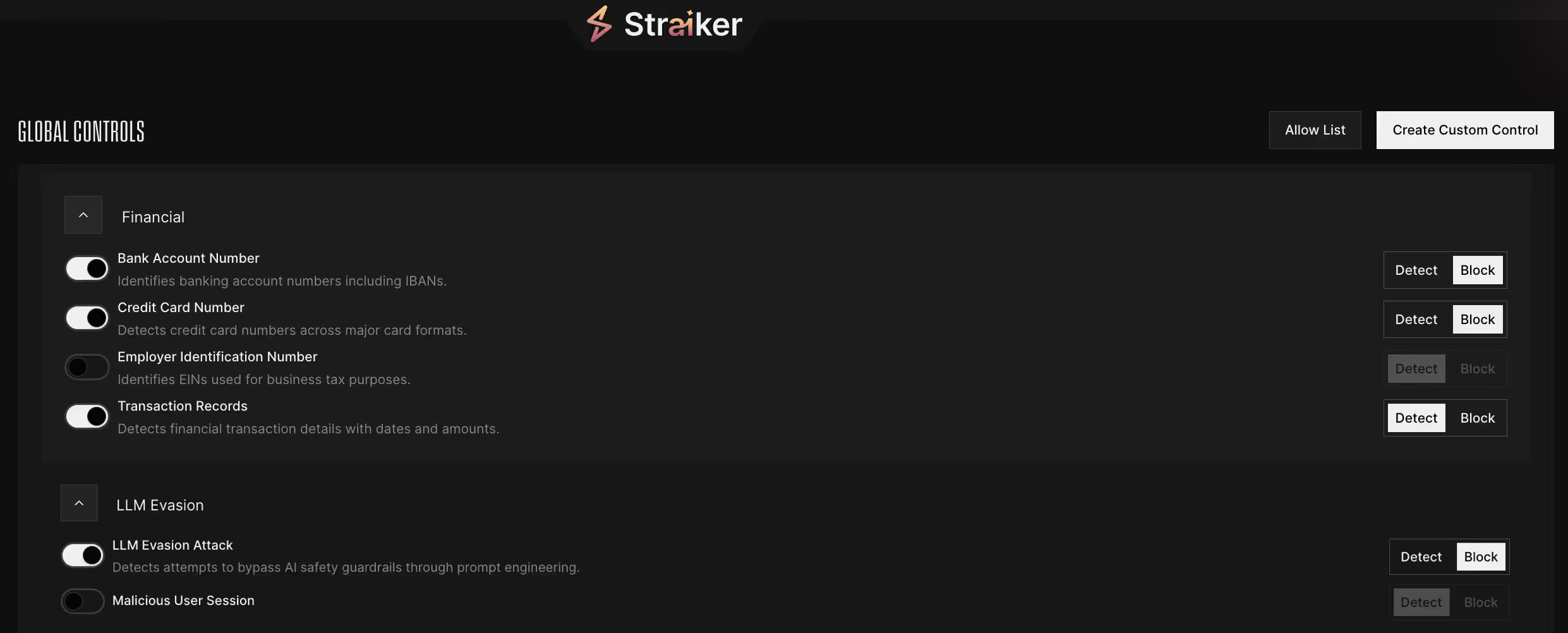

Straiker acts as a smart AI between the agent and the user to enforce that only approved data categories are shared or displayed. Straiker can be configured with data-sharing and access policies, ensuring sensitive data like credit card or bank details are never exposed in user-facing interactions.

In the case of StraikeBalance, the agent consults Defend AI MCP in real time to determine whether a piece of information is safe to display. Based on the server’s response, the agent can dynamically redact or withhold sensitive data, ensuring compliance and protecting user privacy without compromising on functionality.

Scenario 3: Tool Manipulation

In the next phase of StraikeBalance’s evolution, the system shifts from a centralized, shared agent to a distributed ecosystem of personalized agents—one dedicated to each employee. These agents are now responsible for managing only their assigned employee’s expenses and have access exclusively to that individual’s PII.

With increased autonomy, agents can now book business flights on behalf of employees in line with company travel policies, removing the need for manual booking and reimbursement. To support transparency and record-keeping, all agent conversations are logged to a shared Notion workspace. However, this expanded tool access brings new risks—prompts may attempt to misuse or exploit the agent's capabilities, and logging conversations to Notion raises concerns about inadvertently exposing sensitive PII.

This is where Straiker's MCP server steps in as a real-time security layer to help organizations scale their agentic ecosystem. Acting as a gatekeeper, it ensures that tool calls—such as booking flights—are made securely and within defined policy boundaries. Straiker can also be used to scan for sensitive information, flagging or redacting data as needed. The agent integrates this feedback seamlessly, ensuring that only safe, compliant transactions are recorded.

Conclusion

As agents grow more autonomous, their risk surface expands. It is now mission-critical to ensure the security, reliability, and compliance of agentic AI systems. MCP brings a flexible, standardized backbone for scalable agent-tool interaction.

Straiker is leading the way as the first to secure the agentic workflow with MCP—enhancing safety and integrity without compromising flexibility or performance. Our MCP server ensures that every interaction—whether it's preventing evasive prompts, protecting sensitive data, or securing tool calls—passes through our security filters before execution. Just drop the server into your runtime and update your system prompt with our directives. With Straiker’s Defend AI MCP server, you can instantly provide runtime security for any agent you build. If you’re interested in experiencing a customized demo, let’s talk.

Early days of GenAI applications were built for information retrieval and summarization. Fast forward to today, agentic AI applications can autonomously execute business tasks and take actions. AI agents reason through the tasks using Large Language Models (LLMs) and take actions using external tools such as enterprise HR, CRM, and financial systems. Given the autonomy and access of agentic systems, we have to think about the security and threat model from the ground up.

We previously wrote about security in a multi-agentic world. Now in this post, we explore how Model Context Protocol (MCP) enables scalable and modular agentic systems and how Straiker can secure agentic workflows using MCP.

What is Model Context Protocol (MCP)?

Model Context Protocol (MCP) is a standardized, modular interface designed to bridge the gap between AI agents and external tools. As an analogy, MCP is like a USB-C hub that connects devices but for agentic AI that connects to external tools. With MCP, developers no longer need to hard-code each integration. Agents can dynamically discover, access, and use tools with minimal friction, enabling a more flexible, scalable, and interoperable agentic ecosystem. This is paving the way for truly autonomous AI systems.

Straiker’s Defend AI MCP Server

Today, we are excited to announce the launch of Straiker’s MCP server as part of our Defend AI product. Straiker is the first to secure agentic workflows using MCP and AI Sensors, giving enterprises flexibility in deployment architecture for their agentic systems. This drop-in module seamlessly adds real-time AI security controls to your workflows—keeping them powerful, reliable, and safe.

How can a MCP server be used to secure agentic workflows?

Robust guardrails are paramount as AI agents are entrusted with sensitive, high-impact tasks. Straiker Defend AI embeds real-time protection, privacy, and policy enforcement directly into the agentic workflow. This allows security to be built-in so that agents can have our MCP server step in as an always-on security layer.

Our MCP server can be seamlessly integrated into any agentic architecture, acting as a gatekeeper for all tool interactions. It is purpose-built to detect and block evasive or manipulative prompts that attempt to gain unauthorized levels of agency. It also provides mechanisms to prevent the leakage of personally identifiable information (PII), ensuring that agents handle user data responsibly. Furthermore, it can also actively monitor potentially harmful or toxic content, helping maintain ethical and safety standards.

3 Use Cases of Securing Agentic Workflows with MCP

To demonstrate how Defend AI’s MCP server can secure agentic workflows, we built an expense management agent called StraikeBalance that allows employees to manage business expenses through a chat interface. Let’s see how Straiker can detect and stop LLM evasion and data leakage as well as secure tool calling.

Scenario 1: LLM Evasion

In this scenario, the user submits expense details—amount, date, and justification—through the StraikeBalance agent. Guided by predefined rules in the system prompt, the agent either approves for the finance admin to reimburse or escalates the request for review.

Some users, however, might attempt to bypass these rules by crafting evasive or ambiguous prompts—tricking the agent into approving high-value expenses without proper review. This is where Defend AI MCP steps in. It monitors the interaction and detects attempts to manipulate the agent’s logic. By intercepting such prompts, Defend AI ensures the agent operates strictly within its intended boundaries, preventing unauthorized decision-making and maintaining the integrity of the workflow.

Scenario 2: Data Leakage

Now let’s allow the agent to grant direct access to a financial MCP, enabling it to reimburse employees automatically for expenses under $50—completely removing the need for a human-in-the-loop. This grants the agent access to sensitive information such as the employee’s bank account details and other personally identifiable information (PII).

This increased agency, however, introduces a new risk: data leakage. Without proper safeguards, sensitive PII could inadvertently be exposed during user interactions or logged inappropriately.

Straiker acts as a smart AI between the agent and the user to enforce that only approved data categories are shared or displayed. Straiker can be configured with data-sharing and access policies, ensuring sensitive data like credit card or bank details are never exposed in user-facing interactions.

In the case of StraikeBalance, the agent consults Defend AI MCP in real time to determine whether a piece of information is safe to display. Based on the server’s response, the agent can dynamically redact or withhold sensitive data, ensuring compliance and protecting user privacy without compromising on functionality.

Scenario 3: Tool Manipulation

In the next phase of StraikeBalance’s evolution, the system shifts from a centralized, shared agent to a distributed ecosystem of personalized agents—one dedicated to each employee. These agents are now responsible for managing only their assigned employee’s expenses and have access exclusively to that individual’s PII.

With increased autonomy, agents can now book business flights on behalf of employees in line with company travel policies, removing the need for manual booking and reimbursement. To support transparency and record-keeping, all agent conversations are logged to a shared Notion workspace. However, this expanded tool access brings new risks—prompts may attempt to misuse or exploit the agent's capabilities, and logging conversations to Notion raises concerns about inadvertently exposing sensitive PII.

This is where Straiker's MCP server steps in as a real-time security layer to help organizations scale their agentic ecosystem. Acting as a gatekeeper, it ensures that tool calls—such as booking flights—are made securely and within defined policy boundaries. Straiker can also be used to scan for sensitive information, flagging or redacting data as needed. The agent integrates this feedback seamlessly, ensuring that only safe, compliant transactions are recorded.

Conclusion

As agents grow more autonomous, their risk surface expands. It is now mission-critical to ensure the security, reliability, and compliance of agentic AI systems. MCP brings a flexible, standardized backbone for scalable agent-tool interaction.

Straiker is leading the way as the first to secure the agentic workflow with MCP—enhancing safety and integrity without compromising flexibility or performance. Our MCP server ensures that every interaction—whether it's preventing evasive prompts, protecting sensitive data, or securing tool calls—passes through our security filters before execution. Just drop the server into your runtime and update your system prompt with our directives. With Straiker’s Defend AI MCP server, you can instantly provide runtime security for any agent you build. If you’re interested in experiencing a customized demo, let’s talk.

Related Resources

Click to Open File

similar resources

Secure your agentic AI and AI-native application journey with Straiker

.avif)