Agentic AI Security for Developers: Embedding Autonomous Attack Simulation into CI/CD

As AI agents enter production, static security scans (SAST) can’t catch dynamic, reasoning driven risks. Learn how to integrate Autonomous Attack Simulation (AAS) into your CI/CD pipeline to test and harden agent behavior before deployment.

The Cognitive Gap in CI/CD

Developers are building applications that leverage reasoning engines. LLM-powered agents on platforms and AI assisted IDEs like Replit, Lovable, Cursor, Windsurf and Vercel can write code, trigger builds and deploy infrastructure.

Yet, our pipelines still test for traditional vulnerabilities like SQL injection, dependency CVEs, container misconfigurations while ignoring a new class of risks: behavioral vulnerabilities.

Prompt injection, unsafe tool use and data exfiltration through reasoning chains do not appear in code scans but emerge during execution.

Traditional CI/CD stops at code. Agentic AI requires testing cognition.

From DevSecOps to AgentSecOps

DevSecOps secures build, test and deploy. AgentSecOps extends that model to behavior and reasoning by validating how autonomous agents interpret instructions, share context and act on external systems.

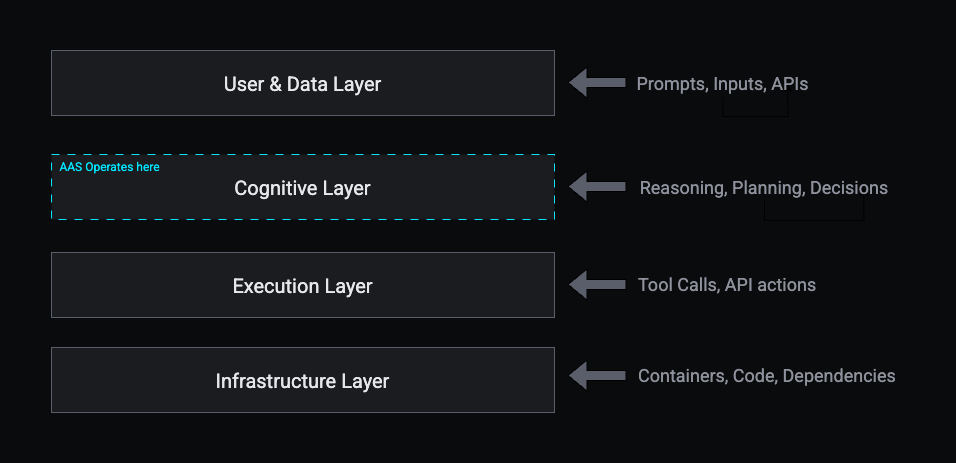

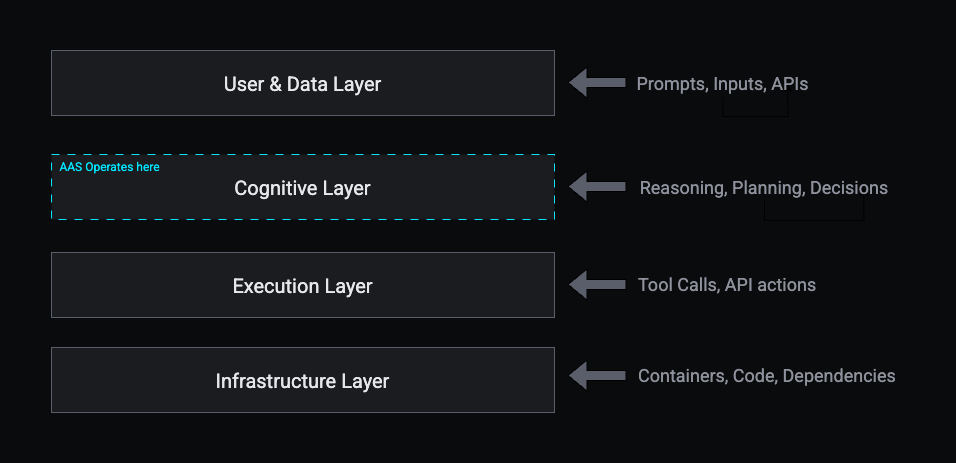

The foundation of AgentSecOps is Autonomous Attack Simulation (AAS): automated red teaming for cognitive behavior. Unlike static security tests, AAS uses adversarial agents to probe other agents continuously there by testing not what systems contain but how they think.

What is Autonomous Attack Simulation?

Autonomous Attack Simulation (AAS) extends traditional red teaming into the language and reasoning layer of software systems. Instead of human testers crafting exploits, adversarial agents automatically generate and execute attack scenarios against target agents inside a controlled sandbox environment. AAS requires understanding of how large language models process instructions and how attackers can exploit the flexible nature of natural language interfaces.

Each CI/CD run becomes a behavioral testbed to validate how an agent reacts to adversarial prompts, context poisoning or unsafe tool triggers.

Core Components

AAS differs from traditional AI red teaming: model evaluations test response safety; AAS tests system behavior like the decisions that agents make, tools they invoke and data they touch.

5 reasons Why Shift-Left Security for AI matters more than ever

The “shift-left” security principles apply to AI agents just as it does to traditional applications.

Here are 5 top reasons:

- Natural language is ambiguous by design. When apps are specified with prompts and instructions, subtle language variations can change behavior. Ambiguities are easter eggs waiting to be exploited.

- Multi-agent orchestration multiplies attack surfaces. When agents coordinate and pass context around, the boundaries blur and misplaced context or bad tools in the mix can cascade effects across the system causing autonomous chaos.

- Runtime decisions create new risks. Agents make dynamic API calls, execute transactions and surface PII. They interpret business goals and plans/reasons for tasks that can’t fully be validated before runtime.

- Tool + plugin misuse happens during execution. Agents calling tools like search, payment and CI/CD triggers can be socially engineered into unsafe behaviors. A thoroughly vetted external tool can change its behavior without changing its API contract. Unlike traditional applications, these behaviors are fed straight into agentic reasoning.

- Memory and context persistence leaks across sessions. What was “safe” in one conversation can mistakenly influence another.

These are behavioral issues, not code issues and they require testing during execution.

Integrating AAS into CI/CD

AAS fits naturally as a dedicated test stage in a CI/CD pipeline just like SAST or DAST tools, but for cognitive behavior.

# AAS Integration YAML Sample

name: Agentic Security Pipeline

on:

pull_request:

branches: [main]

jobs:

build:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v5

- name: Install dependencies

run: npm ci

aas_test:

runs-on: ubuntu-latest

needs: build

steps:

- name: Run Autonomous Attack Simulation

run: aas run --config aas.yml --report reports/aas.json

deploy:

if: success()

runs-on: ubuntu-latest

needs: [build, aas_test]

steps:

- name: Deploy to staging

run: ./scripts/deploy.shHow does it work:

- CI spins up an isolated environment with all the target agents to evaluate.

- The AAS engine launches adversarial agents from a threat library.

- Multi-turn adversarial sessions probe reasoning, context and permissions.

- Behavioral telemetry is logged and scored against agent contracts.

- Failing tests block deployment and output structured reports.

- Continuous monitoring provides insights into agentic behavioral drift from one build to another.

Think of it as fuzz testing for cognition. We are probing the decision/reasoning paths instead of code paths.

Defining Agent Contracts

Agent contracts define agent boundaries including its tools, data scopes and reasoning limits. They serve as the least privilege manifests for autonomy.

# Agent Contract YAML Sample

agent: "research-assistant"

permissions:

tools:

- name: "search"

allow: ["read"]

- name: "codegen"

allow: ["generate"]

data:

- path: "customer/*"

allow: ["read"]

- path: "finance/*"

allow: []

constraints:

reasoning_depth: 5

memory_scope: "session"During simulation, AAS doesn’t just test single-agent prompts, but agent ecosystems and validates that:

- Agents stay within defined permissions.

- Context doesn’t leak across sessions or agents.

- Tools are invoked with proper authorization.

- Memory remains scope to its intended use.

If an adversarial prompt induces a contract violation, the pipeline flags it immediately.

Why Multi-Agent Systems Demand Continuous Attack Simulation

Modern architectures use multi-agent orchestration via protocols like the MCP (Model Context Protocol), A2A (Agent to Agent) or frameworks like Agentic Retrieval Augment Generation (Agentic RAG), Context or Cache Augmented Generation (CAG). These systems share context, delegate authority, and act autonomously thereby expanding the attack surface in ways static analysis can’t see. These systems can asynchronously drift with updates to data and configurations which are not necessarily through traditional code and deployments.

AAS detects this cascade by:

- Tracing reasoning lineage across agents

- Verifying contract adherence at each node

- Surfacing the exact conversation that led to failure.

This means security can no longer rely on one-time audits. It now requires continuous, real-time assessments of AI agent behavior, shifting testing from functional validation to full system behavioral validation.

Measuring Behavioral Security

Behavioral testing demands measurable outcomes that can be incorporated into CI/CD pipelines. AAS enables quantitative tracking similar to unit-test coverage. Here are some metrics to look at for empirical validation.

These metrics make cognitive security observable and therefore improvable.

How to Get Started

Teams can begin with small, incremental adoption:

- Identify critical agents: those with data or operational authority can take precedence.

- Write Contracts: define allowed tools and data scopes.

- Add an AAS test stage: integrate into existing CI/CD jobs.

- Record incidents: feed production anomalies back as new adversarial tests

- Automate enforcement: add runtime guardrails for high risk operations.

Over time, this should become a self-reinforcing feedback loop. Each detected exploit expands the test corpus, continuously hardening the system behavior.

The future: AgentSecOps

As software gains autonomy, DevSecOps must evolve into AgentSecOps - a discipline focused on securing cognition, not just code.

“Just as chaos engineering improved reliability through controlled failure, Autonomous Attack Simulation improves safety through controlled deception"

Every commit becomes a behavioral rehearsal which is a chance to catch unsafe reasoning before it reaches users.

In Closing

The next security boundary isn’t in your codebase, it is in your agents’ decision making. Pipelines must validate how systems think, not just what they contain.

Autonomous Attack Simulation offers a path forward: continuous, automated red teaming embedded directly into CI/CD.

At Straiker, we are building the tools that operationalize this vision - agentic security that continuously tests, observes and reinforces safe AI behavior across build, deploy and runtime.

“Test cognition before it ships. That’s the new security frontier”

Learn more about Straiker’s approach here.

The Cognitive Gap in CI/CD

Developers are building applications that leverage reasoning engines. LLM-powered agents on platforms and AI assisted IDEs like Replit, Lovable, Cursor, Windsurf and Vercel can write code, trigger builds and deploy infrastructure.

Yet, our pipelines still test for traditional vulnerabilities like SQL injection, dependency CVEs, container misconfigurations while ignoring a new class of risks: behavioral vulnerabilities.

Prompt injection, unsafe tool use and data exfiltration through reasoning chains do not appear in code scans but emerge during execution.

Traditional CI/CD stops at code. Agentic AI requires testing cognition.

From DevSecOps to AgentSecOps

DevSecOps secures build, test and deploy. AgentSecOps extends that model to behavior and reasoning by validating how autonomous agents interpret instructions, share context and act on external systems.

The foundation of AgentSecOps is Autonomous Attack Simulation (AAS): automated red teaming for cognitive behavior. Unlike static security tests, AAS uses adversarial agents to probe other agents continuously there by testing not what systems contain but how they think.

What is Autonomous Attack Simulation?

Autonomous Attack Simulation (AAS) extends traditional red teaming into the language and reasoning layer of software systems. Instead of human testers crafting exploits, adversarial agents automatically generate and execute attack scenarios against target agents inside a controlled sandbox environment. AAS requires understanding of how large language models process instructions and how attackers can exploit the flexible nature of natural language interfaces.

Each CI/CD run becomes a behavioral testbed to validate how an agent reacts to adversarial prompts, context poisoning or unsafe tool triggers.

Core Components

AAS differs from traditional AI red teaming: model evaluations test response safety; AAS tests system behavior like the decisions that agents make, tools they invoke and data they touch.

5 reasons Why Shift-Left Security for AI matters more than ever

The “shift-left” security principles apply to AI agents just as it does to traditional applications.

Here are 5 top reasons:

- Natural language is ambiguous by design. When apps are specified with prompts and instructions, subtle language variations can change behavior. Ambiguities are easter eggs waiting to be exploited.

- Multi-agent orchestration multiplies attack surfaces. When agents coordinate and pass context around, the boundaries blur and misplaced context or bad tools in the mix can cascade effects across the system causing autonomous chaos.

- Runtime decisions create new risks. Agents make dynamic API calls, execute transactions and surface PII. They interpret business goals and plans/reasons for tasks that can’t fully be validated before runtime.

- Tool + plugin misuse happens during execution. Agents calling tools like search, payment and CI/CD triggers can be socially engineered into unsafe behaviors. A thoroughly vetted external tool can change its behavior without changing its API contract. Unlike traditional applications, these behaviors are fed straight into agentic reasoning.

- Memory and context persistence leaks across sessions. What was “safe” in one conversation can mistakenly influence another.

These are behavioral issues, not code issues and they require testing during execution.

Integrating AAS into CI/CD

AAS fits naturally as a dedicated test stage in a CI/CD pipeline just like SAST or DAST tools, but for cognitive behavior.

# AAS Integration YAML Sample

name: Agentic Security Pipeline

on:

pull_request:

branches: [main]

jobs:

build:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v5

- name: Install dependencies

run: npm ci

aas_test:

runs-on: ubuntu-latest

needs: build

steps:

- name: Run Autonomous Attack Simulation

run: aas run --config aas.yml --report reports/aas.json

deploy:

if: success()

runs-on: ubuntu-latest

needs: [build, aas_test]

steps:

- name: Deploy to staging

run: ./scripts/deploy.shHow does it work:

- CI spins up an isolated environment with all the target agents to evaluate.

- The AAS engine launches adversarial agents from a threat library.

- Multi-turn adversarial sessions probe reasoning, context and permissions.

- Behavioral telemetry is logged and scored against agent contracts.

- Failing tests block deployment and output structured reports.

- Continuous monitoring provides insights into agentic behavioral drift from one build to another.

Think of it as fuzz testing for cognition. We are probing the decision/reasoning paths instead of code paths.

Defining Agent Contracts

Agent contracts define agent boundaries including its tools, data scopes and reasoning limits. They serve as the least privilege manifests for autonomy.

# Agent Contract YAML Sample

agent: "research-assistant"

permissions:

tools:

- name: "search"

allow: ["read"]

- name: "codegen"

allow: ["generate"]

data:

- path: "customer/*"

allow: ["read"]

- path: "finance/*"

allow: []

constraints:

reasoning_depth: 5

memory_scope: "session"During simulation, AAS doesn’t just test single-agent prompts, but agent ecosystems and validates that:

- Agents stay within defined permissions.

- Context doesn’t leak across sessions or agents.

- Tools are invoked with proper authorization.

- Memory remains scope to its intended use.

If an adversarial prompt induces a contract violation, the pipeline flags it immediately.

Why Multi-Agent Systems Demand Continuous Attack Simulation

Modern architectures use multi-agent orchestration via protocols like the MCP (Model Context Protocol), A2A (Agent to Agent) or frameworks like Agentic Retrieval Augment Generation (Agentic RAG), Context or Cache Augmented Generation (CAG). These systems share context, delegate authority, and act autonomously thereby expanding the attack surface in ways static analysis can’t see. These systems can asynchronously drift with updates to data and configurations which are not necessarily through traditional code and deployments.

AAS detects this cascade by:

- Tracing reasoning lineage across agents

- Verifying contract adherence at each node

- Surfacing the exact conversation that led to failure.

This means security can no longer rely on one-time audits. It now requires continuous, real-time assessments of AI agent behavior, shifting testing from functional validation to full system behavioral validation.

Measuring Behavioral Security

Behavioral testing demands measurable outcomes that can be incorporated into CI/CD pipelines. AAS enables quantitative tracking similar to unit-test coverage. Here are some metrics to look at for empirical validation.

These metrics make cognitive security observable and therefore improvable.

How to Get Started

Teams can begin with small, incremental adoption:

- Identify critical agents: those with data or operational authority can take precedence.

- Write Contracts: define allowed tools and data scopes.

- Add an AAS test stage: integrate into existing CI/CD jobs.

- Record incidents: feed production anomalies back as new adversarial tests

- Automate enforcement: add runtime guardrails for high risk operations.

Over time, this should become a self-reinforcing feedback loop. Each detected exploit expands the test corpus, continuously hardening the system behavior.

The future: AgentSecOps

As software gains autonomy, DevSecOps must evolve into AgentSecOps - a discipline focused on securing cognition, not just code.

“Just as chaos engineering improved reliability through controlled failure, Autonomous Attack Simulation improves safety through controlled deception"

Every commit becomes a behavioral rehearsal which is a chance to catch unsafe reasoning before it reaches users.

In Closing

The next security boundary isn’t in your codebase, it is in your agents’ decision making. Pipelines must validate how systems think, not just what they contain.

Autonomous Attack Simulation offers a path forward: continuous, automated red teaming embedded directly into CI/CD.

At Straiker, we are building the tools that operationalize this vision - agentic security that continuously tests, observes and reinforces safe AI behavior across build, deploy and runtime.

“Test cognition before it ships. That’s the new security frontier”

Learn more about Straiker’s approach here.

Related Resources

Click to Open File

similar resources

Secure your agentic AI and AI-native application journey with Straiker

.avif)

.png)