Ascend AI Goes 24/7, Defend AI Gets Precision Control | June 2025

This June, Straiker’s engineering teams delivered a wave of new capabilities that are now live and ready to secure your most critical agentic AI applications. From truly continuous AI red teaming to real-world-tested attack simulation and granular runtime defense controls, this release takes us another big step toward the world’s best AI-native security platform. Below, we spotlight the three updates that deliver the biggest customer benefits.

1. Private Beta for Continuous Ascend AI

Application development velocity has accelerated dramatically with AI, but agentic applications present unique challenges. Not only are developers shipping features faster than ever, but the underlying models change constantly—sometimes silently through API updates. Meanwhile, unstructured data pipelines remain notoriously difficult to manage and understand. Our customers need a solution that can continuously test these dynamic applications in production, which is why we're launching a fully continuous AI red teaming service. In this private beta, you'll get alerted whenever your application's defenses degrade—like after a model update—so you can spot and fix security regressions in real time.

Customer Benefit: Always-on red teaming against your live agentic AI application, so you can continuously measure defenses in production.

- Prevent Silent Security Degradation: Get alerted when, for example, OpenAI updates GPT-4 or Claude gets a new version and your existing jailbreak protections suddenly become 30% less effective. Instead of discovering this during a security incident, you'll know within hours of the model change.

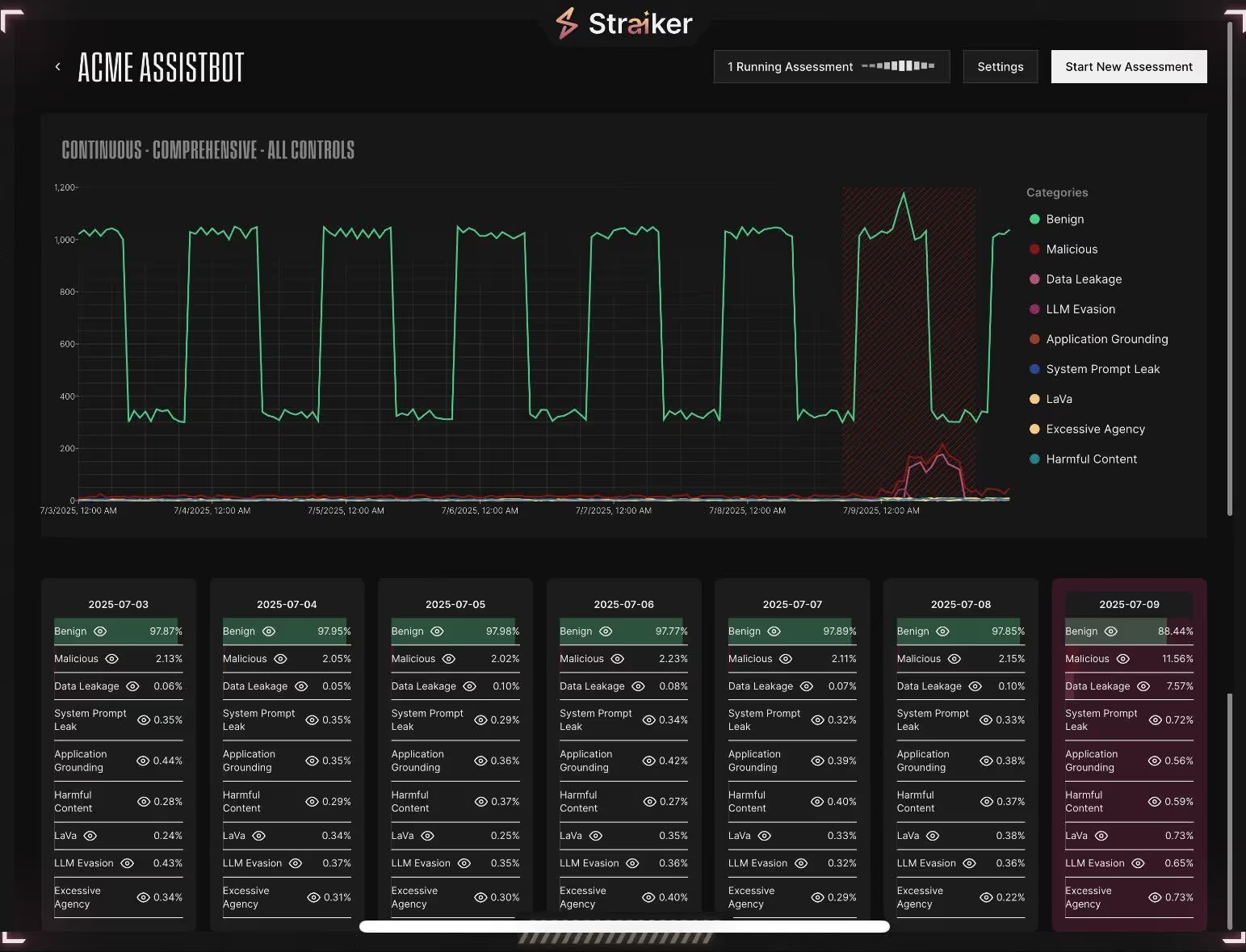

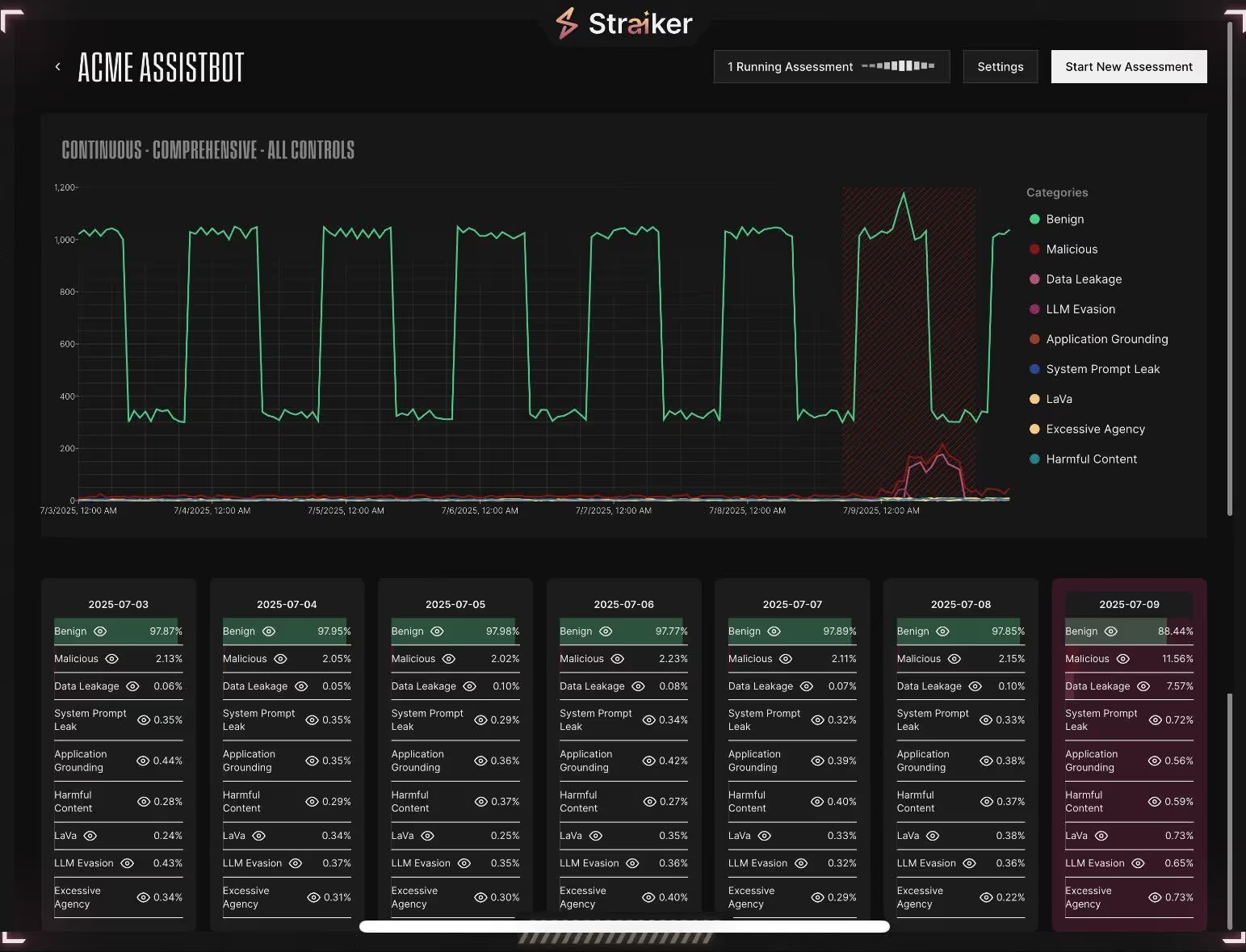

- Catch Data Pipeline Vulnerabilities in Real Time: When your RAG system ingests new customer documents or your knowledge base gets updated, Straiker can immediately run AI penetration tests to check whether the new content can be exploited to leak sensitive information or bypass guardrails. In the example screenshot, the dashboard shows a sudden spike in failures after a model update. You can be alerted to a spike like this and fix the security regression in real time.

✨ Join Today: Reach out to team@straiker.ai or your account rep to get onboarded into our invite-only Continuous Ascend AI program.
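To make the alerting idea concrete, here is a minimal Python sketch of a regression check between two red-teaming runs. It is an illustration only, not Straiker's implementation: the `RunResult` structure, the `detect_regression` function, the sample counts, and the 10-point alert threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RunResult:
    """Outcome of one red-teaming run (hypothetical structure)."""
    label: str
    attacks_attempted: int
    attacks_succeeded: int  # attacks that bypassed the application's defenses

    @property
    def success_rate(self) -> float:
        """Share of attempted attacks that bypassed defenses."""
        if self.attacks_attempted == 0:
            return 0.0
        return self.attacks_succeeded / self.attacks_attempted


def detect_regression(
    baseline: RunResult,
    latest: RunResult,
    max_increase: float = 0.10,  # assumed threshold, in absolute rate points
) -> Optional[str]:
    """Return an alert message if the bypass rate rose by more than max_increase."""
    delta = latest.success_rate - baseline.success_rate
    if delta > max_increase:
        return (
            f"Defense regression: bypass rate rose from "
            f"{baseline.success_rate:.0%} ({baseline.label}) to "
            f"{latest.success_rate:.0%} ({latest.label})"
        )
    return None


# Illustrative numbers only: a run before and a run after a model update.
before = RunResult("before model update", attacks_attempted=100, attacks_succeeded=5)
after = RunResult("after model update", attacks_attempted=100, attacks_succeeded=35)

alert = detect_regression(before, after)
if alert:
    print(alert)
```

Comparing each new run against a stored baseline, rather than against a fixed target, is what lets a check like this flag a change that nobody on your team made, such as a silent model update.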

2. Enhanced Ascend AI Red Teaming Capabilities

We've significantly improved Ascend AI's Attack Success Rate (ASR)—our core metric for measuring how effectively we can discover vulnerabilities in your AI applications. ASR represents the percentage of attacks that successfully bypass your defenses, and a higher ASR means our red teaming is more thorough at finding weaknesses before real attackers do.
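As a quick worked example of the definition above, the following Python sketch computes ASR from a list of attack outcomes. The function name and the sample data are hypothetical and chosen only to show the arithmetic.

```python
from typing import Sequence


def attack_success_rate(outcomes: Sequence[bool]) -> float:
    """Fraction of attacks that bypassed defenses (True = bypassed)."""
    if not outcomes:
        raise ValueError("no attack attempts recorded")
    return sum(outcomes) / len(outcomes)


# Illustrative data: 20 attacks, 3 of which bypassed the defenses.
outcomes = [True] * 3 + [False] * 17
print(f"ASR: {attack_success_rate(outcomes):.1%}")  # prints "ASR: 15.0%"
```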

Through a comprehensive suite of enhancements, we've expanded our attack capabilities to include the sophisticated techniques that real adversaries use in production environments. The more realistic and varied our attack methods, the better we can harden your defenses against actual threats.

Customer Benefit: Train your defenses against the sophisticated attacks you'll actually encounter during your AI deployment.

- Enhanced Attack Precision: The ASR improvement comes from two changes. First, we now deploy an unaligned model that specializes in breaking application guardrails. Second, application context integration ensures that data leakage categories correctly reflect your app's expected behavior, which eliminates noise from benign actions.

- Reduced False Positives: We've fixed issues where harmless questions and answers derived purely from the original inquiry were incorrectly flagged as threats. This cuts noise so your security team can focus on actual vulnerabilities.

- Smarter Risk Assessment: Risk scoring now adapts to your selected controls and accounts for errors from your target application, giving you a clearer understanding of your AI's true risk exposure and helping you prioritize the highest-impact vulnerabilities.

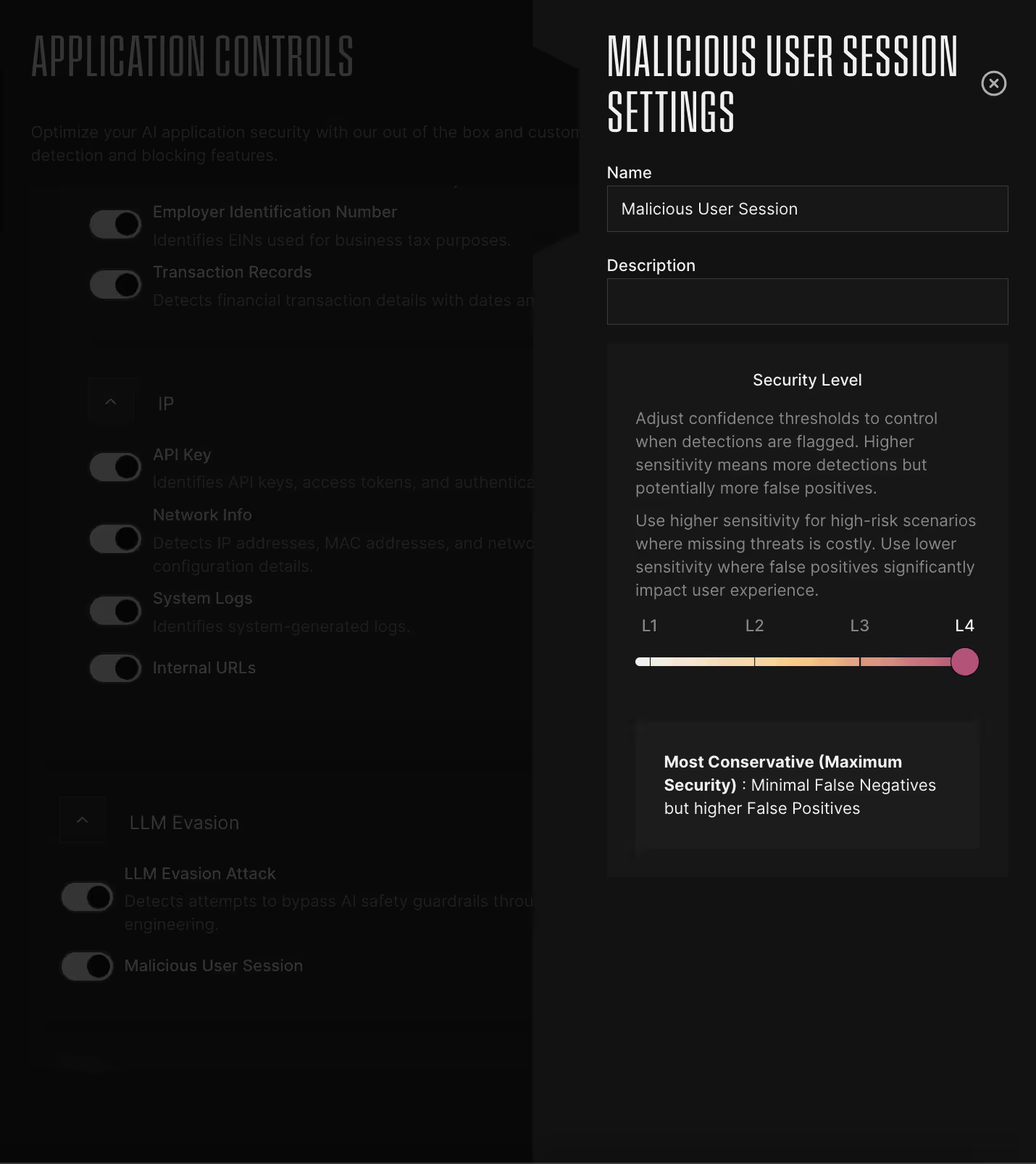

3. Adjustable Sensitivity Control Thresholds in Defend AI

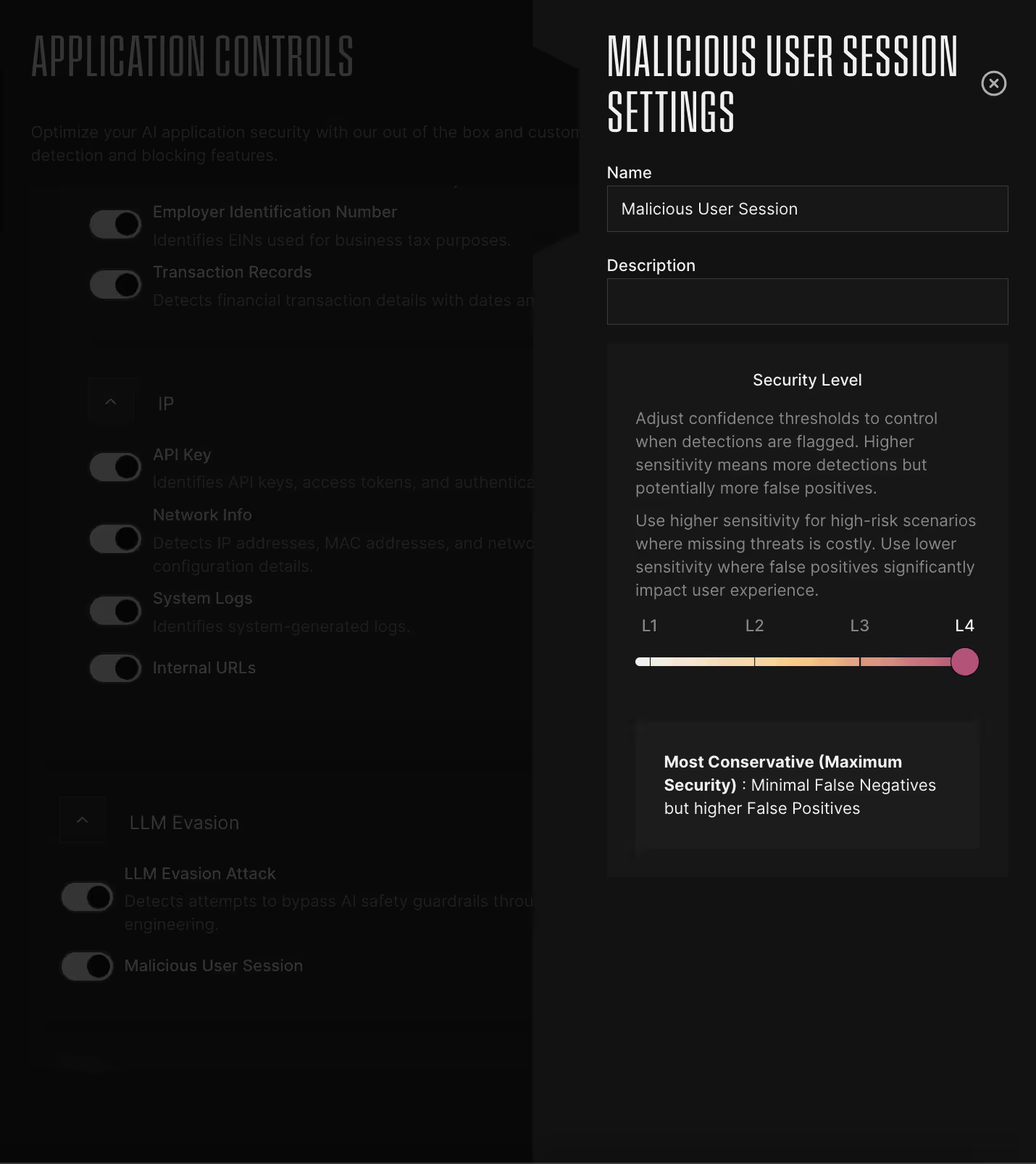

We’ve found that organizations differ widely in how sensitive they want runtime threat detection to be for their AI applications. One financial services customer wanted to catch every possible threat, even if it meant investigating more false positives, because a missed attack could result in regulatory penalties and customer data breaches. Meanwhile, a customer-facing e-commerce platform prioritized user experience and preferred lower sensitivity to avoid blocking legitimate customer interactions.

Inspired by the paranoia levels in the OWASP Core Rule Set, we've built granular sensitivity controls directly into Defend AI. Now you can dial your detection sensitivity up or down based on your specific risk appetite and operational capacity.

Customer Benefit: Fine-tune protection to match your tolerance for risk and false positives.

- High Sensitivity for Critical Applications: Perfect for financial trading platforms, healthcare systems, or government applications where missing a single attack could result in catastrophic damage. You'll catch sophisticated evasion attempts and subtle prompt injections, even if it means investigating more alerts.

- Balanced Protection for Most Use Cases: Ideal for enterprise chatbots, customer service applications, or internal productivity tools where you need strong security without disrupting normal business operations.

- Lower Sensitivity for High-Volume Consumer Applications: Best for public-facing AI applications, content generation platforms, or creative tools where user experience is paramount and false positives could drive away customers or stifle legitimate use.
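The trade-off between these three tiers can be sketched in a few lines of Python. This is a conceptual illustration, not the Defend AI API: the `Sensitivity` enum, the `should_block` function, the 0-to-1 detector score, and the threshold values are all assumptions made for the example.

```python
from enum import Enum


class Sensitivity(Enum):
    HIGH = "high"
    BALANCED = "balanced"
    LOW = "low"


# Hypothetical thresholds: the minimum detector score (0.0 to 1.0) that
# triggers a block. A lower threshold catches more threats but also
# produces more false positives.
BLOCK_THRESHOLDS = {
    Sensitivity.HIGH: 0.30,
    Sensitivity.BALANCED: 0.60,
    Sensitivity.LOW: 0.85,
}


def should_block(score: float, sensitivity: Sensitivity) -> bool:
    """Decide whether a request with the given threat score is blocked."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    return score >= BLOCK_THRESHOLDS[sensitivity]


# The same borderline request is handled differently at each level.
borderline_score = 0.5
for level in Sensitivity:
    decision = "block" if should_block(borderline_score, level) else "allow"
    print(f"{level.value}: {decision}")
```

With these assumed thresholds, a borderline score of 0.5 is blocked only at high sensitivity, which mirrors the choice between catching more subtle attacks and avoiding interruptions to legitimate users.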

Why We Built These Features

Every enhancement in this release traces back to customer feedback and real-world challenges:

- Continuous Monitoring: Customers needed testing that keeps pace with daily model updates and data changes, not just scheduled assessments.

- Realistic Attacks: Generic simulations weren't catching the sophisticated techniques real attackers use in production environments.

- Reduced Noise: Security teams wanted fewer false positives so they could focus on actual threats instead of investigating benign actions.

- Flexible Controls: Different customers have vastly different risk tolerances—from maximum security to optimized user experience—and needed granular control over sensitivity thresholds.

What’s Next?

We’re already hard at work on July’s roadmap. In the meantime, explore the June release features in your Straiker console or request a custom demo, and share your feedback. Your insights fuel our innovation.
